
Data Tokenization

What is Data Tokenization?

Data Tokenization is a format-preserving, reversible data masking technique useful for de-identifying sensitive data (such as PII) at-rest. As data tokenization preserves data formats, the de-identified data can be stored as-is in data stores.

Applications that do not require the original value can use the tokenized value as-is; applications authorized to access the original value can retrieve the original value from the token for further use.

Why should I care about Tokenization?

Tokenizing your data early, during data generation or ingestion, greatly reduces the risk of exposing sensitive data if a data breach occurs. This significantly reduces the reputational risk and remediation cost, because the breached data contains tokens rather than sensitive values or PII.

Tokenization is useful to maintain compliance with ever-increasing data privacy regulations such as GDPR, Schrems II, PCI-DSS, HIPAA, and CCPA.

Tokenization also enables you to share data with third parties and across geographies without exposing the original values.

What are the various methods of tokenization?

There are two approaches for tokenization: Vaulted and Vaultless.

Vaulted tokenization generates a token in the same format as the original. It stores the mapping between the original value (in encrypted form) and its token in a secondary database for reversibility. This enables retrieval of the original value.

However, due to the secondary database, the vaulted approach has performance and scalability drawbacks with increasing data volume. Further, the secondary database incurs additional infrastructure and management costs.
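The vaulted approach can be sketched as follows. This is a minimal illustration, assuming digit-only input and an in-memory dictionary standing in for the secondary database; the `TokenVault` class and its method names are hypothetical, and the encryption of stored originals is omitted for brevity.

```python
import secrets


class TokenVault:
    """Toy in-memory vault (hypothetical); a real vault is a hardened,
    persistent database that stores the original values encrypted."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        if not (value.isascii() and value.isdigit()):
            raise ValueError("this sketch only handles digit strings")
        # Reuse the existing token so one value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        while True:
            # Random digits of the same length preserve the original format.
            token = "".join(secrets.choice("0123456789") for _ in value)
            if token not in self._token_to_value and token != value:
                break
        # The stored mapping is what makes the token reversible.
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Every lookup hits the vault, which is the scalability cost noted above.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("123456789")
print(token, vault.detokenize(token))
```

Because every tokenize and detokenize call reads or writes the mapping, the vault grows with the data volume and sits on the critical path of each operation.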

Vaultless tokenization, the current state of the art, uses a format-preserving encryption (FPE) algorithm with a symmetric key to tokenize data. Detokenization is simply a decryption operation performed with the same symmetric key. Two AES-based FPE algorithms, FF1 and FF3-1, are currently approved by NIST. FF1 is considered more mature and has greater adoption than FF3-1.

Fortanix supports Vaultless tokenization using the NIST-standard FF1 algorithm.
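To illustrate the idea of keyed, format-preserving, reversible tokenization without a vault, here is a toy Feistel construction over decimal digits. It is not FF1 or FF3-1, it is not the Fortanix API, and it is not suitable for production use; the function names, the round count, and the use of HMAC-SHA256 as the round function are all assumptions made for this sketch.

```python
import hashlib
import hmac

ROUNDS = 10  # arbitrary even round count chosen for this toy example


def _round_value(key: bytes, round_no: int, data: str, modulus: int) -> int:
    # Keyed pseudorandom round function (HMAC-SHA256), reduced to the half's range.
    digest = hmac.new(key, f"{round_no}:{data}".encode(), hashlib.sha256).digest()
    return int.from_bytes(digest, "big") % modulus


def tokenize(key: bytes, digits: str) -> str:
    if not (digits.isascii() and digits.isdigit()) or len(digits) < 2:
        raise ValueError("expected a string of at least two decimal digits")
    u = len(digits) // 2
    v = len(digits) - u
    a, b = digits[:u], digits[u:]
    for i in range(ROUNDS):
        m = u if i % 2 == 0 else v  # width of the half being replaced
        c = (int(a) + _round_value(key, i, b, 10 ** m)) % 10 ** m
        a, b = b, str(c).zfill(m)
    return a + b


def detokenize(key: bytes, token: str) -> str:
    u = len(token) // 2
    v = len(token) - u
    a, b = token[:u], token[u:]
    # Undo the rounds in reverse order with the same key.
    for i in reversed(range(ROUNDS)):
        m = u if i % 2 == 0 else v
        c = (int(b) - _round_value(key, i, a, 10 ** m)) % 10 ** m
        a, b = str(c).zfill(m), a
    return a + b


key = b"demo-key-not-for-production"
token = tokenize(key, "4111111111111111")
print(token, detokenize(key, token))
```

The token has the same length and character set as the input, and no mapping table is stored: anyone holding the key can recover the original, and no one else can.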

What is the advantage of Tokenization over Database encryption?

Tokenization is format-preserving and portable. This means that data can be tokenized (encrypted with FF1) once upon generation or ingestion and then be copied internally or shared externally as needed.

Most applications that do not need access to sensitive fields can use the tokenized data as-is. However, the small set of applications that might need access to sensitive fields can decrypt the tokenized data on the fly to obtain the original values.

As database encryption is not format-preserving, such data must be decrypted and masked on each read operation. Further, you will need to decrypt and re-encrypt data as it moves from one data store to the next, for example, from a transactional database to an analytical database.

Tokenization requires some upfront low-code development in the application or in an ETL pipeline node to tokenize the data. Still, the low initial effort provides greater ongoing benefits than database encryption.

What is the optimal point in the data lifecycle to tokenize sensitive data?

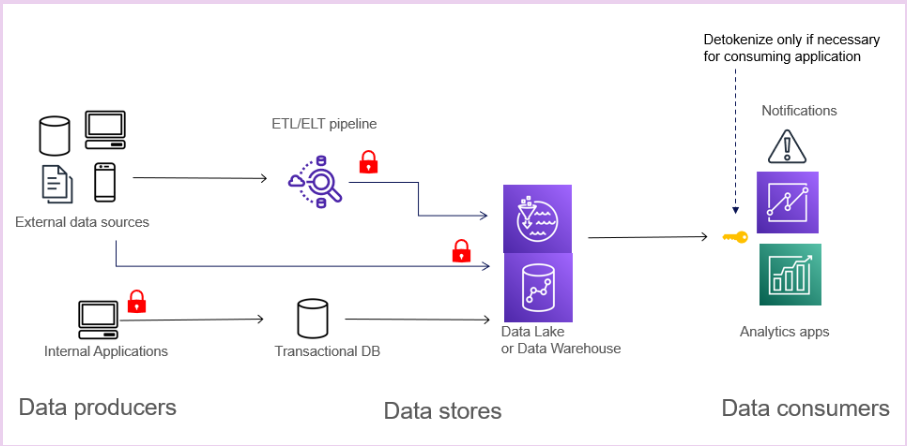

Perform tokenization early, ideally at the data generation or data ingestion stage. Tokenizing in the generating application provides the highest security and performance. However, compliance becomes difficult to govern as the number of applications increases. Tokenization performed in the ETL/ELT pipeline during streaming or batch ingestion performs well and is easy to control for compliance. It can be implemented without having to update the data-generating applications.

Another option is to tokenize at the time of data store writes with User Defined Functions supported by the data store. This is a transparent approach and easy to govern; however, it may impact performance for low-latency transactional databases.
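The pipeline option described above can be sketched as a transformation step that tokenizes selected fields as records flow through. The field names, the `tokenize_records` helper, and the placeholder tokenizer are hypothetical; in practice the `tokenize` callable would invoke an FPE-based tokenization service.

```python
from typing import Callable, Iterable, Iterator

# Hypothetical names of the columns that must never reach the data store in clear.
SENSITIVE_FIELDS = frozenset({"ssn", "card_number"})


def tokenize_records(
    records: Iterable[dict],
    tokenize: Callable[[str], str],
    sensitive_fields: frozenset = SENSITIVE_FIELDS,
) -> Iterator[dict]:
    """Yield copies of the records with sensitive fields replaced by tokens."""
    for record in records:
        yield {
            field: tokenize(value)
            if field in sensitive_fields and value is not None
            else value
            for field, value in record.items()
        }


def placeholder_tokenize(value: str) -> str:
    # Stand-in only: reversing a string is NOT tokenization. Swap in a real
    # format-preserving tokenizer here.
    return value[::-1]


batch = [{"name": "Ann", "ssn": "123456789", "card_number": None}]
for row in tokenize_records(batch, placeholder_tokenize):
    print(row)
```

Keeping the list of sensitive fields in one place in the pipeline is what makes this option easier to audit than tokenizing separately in each generating application.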

What is Card Tokenization?

Tokenization protects sensitive payment information like credit and debit card details. It involves replacing the original sensitive data, such as the 16-digit card number, cardholder name, expiration date, and security code, with a unique substitute known as a "token."

The cardholder data is transformed into a series of random-looking digits with no meaningful correlation to the original information. This makes the tokenized data meaningless to anyone who might gain unauthorized access. Tokenization operates similarly to encryption, which converts data into an unreadable form, but with one key distinction: a token cannot be converted back to its original form by anyone who holds only the token. Only the tokenization service, or a party authorized by it, can recover the original card data.

Tokenization is especially useful when card details need to be kept on file, such as for recurring payments or merchant-initiated transactions. It is also used to speed up the checkout process by allowing merchants to retain a token for a customer's card, reducing the need for customers to input their information every time they make a purchase.

One of the significant advantages of tokenization is that it helps organizations comply with security standards such as the Payment Card Industry Data Security Standard (PCI DSS). Tokenized credit card data can be stored within an organization's secure environment without violating these standards.

Because tokenization maintains the original format and length of the data, businesses can continue using the tokenized data in their existing processes without significant disruptions.

The security benefits of tokenization are clear: it adds an extra layer of protection for consumers' card information because the merchant does not retain the card details. While tokenization is not obligatory, it is highly recommended as an essential service to safeguard consumer data during payment transactions.

How does card tokenization work?

The process involves several steps to ensure security and usability:

Data Collection: A customer enters their payment card details, and the merchant collects this sensitive data, including the 16-digit card number, cardholder name, expiration date, and security code (CVV/CVC).

Token Generation: The collected card data is then forwarded to a tokenization service provided by a trusted third party or managed by the merchant's payment gateway. This service generates a token that is unique to that specific card data.

Token Storage: The token is then stored in the merchant's system or database. At the same time, the original sensitive card data is immediately discarded or stored in a highly secure, compliant system.

Transmission: The token is returned to the merchant and can be used for future transactions. If unauthorized users gain access to the database, they cannot reverse-engineer the original card data from the token.

Transactions: The merchant uses the token instead of the card data for subsequent transactions. The token is sent through the payment process like the original card data.

Authorization: The payment processor or payment gateway receives the token and uses it to request authorization from the card issuer. When the card issuer recognizes the token, the payment processor processes the transaction as if the token were the actual card data.

Decryption and Payment: The payment network decrypts the token and uses the original card data to complete the transaction. The merchant receives payment approval without direct access to the real card details.
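The steps above can be sketched as three cooperating roles. Every class and method name here is hypothetical, the issuer authorization is stubbed out, and the security code is deliberately left out of the stored card record; this is an illustration of who holds what, not a payment integration.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    number: str
    holder: str
    expiry: str


class TokenService:
    """Hypothetical tokenization service; only it can map tokens back to cards."""

    def __init__(self):
        self._vault = {}

    def tokenize(self, card: Card) -> str:
        while True:
            token = "".join(secrets.choice("0123456789") for _ in card.number)
            if token not in self._vault and token != card.number:
                break
        self._vault[token] = card
        return token

    def resolve(self, token: str):
        return self._vault.get(token)


class PaymentProcessor:
    def __init__(self, token_service: TokenService):
        self._token_service = token_service

    def charge(self, token: str, amount_cents: int) -> bool:
        card = self._token_service.resolve(token)
        if card is None:
            return False  # unknown token, nothing to authorize
        # A real processor would now request authorization from the card
        # issuer; this stub simply approves any positive amount.
        return amount_cents > 0


class Merchant:
    def __init__(self, token_service: TokenService, processor: PaymentProcessor):
        self._token_service = token_service
        self._processor = processor
        self.stored_tokens = {}  # customer id -> token; no card data kept

    def save_card(self, customer_id: str, card: Card) -> None:
        self.stored_tokens[customer_id] = self._token_service.tokenize(card)

    def charge(self, customer_id: str, amount_cents: int) -> bool:
        return self._processor.charge(self.stored_tokens[customer_id], amount_cents)


service = TokenService()
merchant = Merchant(service, PaymentProcessor(service))
merchant.save_card("cust-1", Card("4111111111111111", "A. Customer", "12/30"))
print(merchant.stored_tokens["cust-1"], merchant.charge("cust-1", 1999))
```

The merchant's database holds only tokens, so a breach of that database yields nothing that can be charged outside this token service.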

How does tokenization make online payments more secure?

Here's how tokenization makes online payments more secure compared to using your credit card directly:

Data Exposure:

Credit Card: The card number, expiration date, and CVV are exposed to the merchant. This data can be stored insecurely or intercepted during transmission, leading to potential breaches.

Tokenization: It replaces sensitive card data with a random token, and the actual card information is not transmitted or stored, significantly reducing the risk of data exposure.

Randomization:

Credit Card: A credit card number follows a predictable pattern based on the card issuer. Attackers can exploit this predictability to guess valid card numbers.

Tokenization: Tokens are random and have no inherent pattern, which makes it extremely difficult for attackers to guess or reverse-engineer the original data from the token.

Dynamic Tokens:

Credit Card: Card details are static, so unauthorized users who obtain them can reuse them for further payment transactions.

Tokenization: Dynamic tokens are valid only for specific transactions or within limited time frames. This prevents unauthorized reuse of intercepted tokens.
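A dynamic token can be sketched as a single-use value with an expiry time. The `DynamicTokenIssuer` class, the five-minute default lifetime, and the injectable clock are assumptions made for this illustration.

```python
import secrets
import time


class DynamicTokenIssuer:
    """Hypothetical issuer of single-use, time-limited tokens."""

    def __init__(self, ttl_seconds: float = 300.0, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._live = {}  # token -> (card reference, expiry time)

    def issue(self, card_ref: str) -> str:
        token = secrets.token_hex(16)
        self._live[token] = (card_ref, self._clock() + self._ttl)
        return token

    def redeem(self, token: str):
        # pop() removes the token, so a second redemption attempt finds nothing.
        entry = self._live.pop(token, None)
        if entry is None:
            return None
        card_ref, expires_at = entry
        if self._clock() >= expires_at:
            return None  # token outlived its time window
        return card_ref


issuer = DynamicTokenIssuer(ttl_seconds=60)
token = issuer.issue("card-ref-1")
print(issuer.redeem(token))  # first use succeeds
print(issuer.redeem(token))  # replay of the same token is rejected
```

An attacker who intercepts such a token after it has been redeemed, or after its window has closed, holds a value that no longer resolves to anything.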

Centralized Security:

Credit Card: When merchants manage credit card data directly, it can lead to varying levels of protection that are not up to regulatory standards.

Tokenization: Payment processors or dedicated tokenization services offer centralized security expertise, potentially offering higher levels of protection.

Compliance:

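Credit Card: Merchants that store actual credit card data must meet the full set of strict PCI DSS requirements for every system that handles that data, which is costly and difficult.

Tokenization: Since actual cardholder data is not stored in the merchant's systems, fewer systems fall within the scope of PCI DSS, which makes compliance easier to achieve and maintain.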

What is network tokenization?

Network tokenization is a payment security approach provided by card networks such as Mastercard, Visa, American Express, Maestro, RuPay, UnionPay, and Discover. It replaces sensitive card data like primary account numbers (PANs) with unique tokens to enhance security during transactions.

The card brands generate these tokens and constantly update them. Even if a physical debit or credit card is locked due to suspected fraud, the network token ensures that the user's payment credentials remain up to date in real time. As a result, customers encounter fewer instances where transactions are declined due to outdated information. This supports user satisfaction during recurring transactions. Merchants get increased security, reduced declines, cost savings, and improved checkout experiences.

Unlike PCI tokenization, which replaces PANs at specific points, network tokenization affects the entire payment process. Network tokens are domain-specific: each one is tied to a single device, merchant, channel, or transaction type and cannot be used outside that domain.
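Domain restriction can be pictured as a check that a payment request matches the context a token was bound to. The `TokenDomain` type and `within_domain` function below are hypothetical and do not reflect any card network's actual API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TokenDomain:
    """Context a token is bound to; None means 'not restricted on this field'."""

    merchant_id: Optional[str] = None
    device_id: Optional[str] = None
    channel: Optional[str] = None


def within_domain(bound: TokenDomain, request: TokenDomain) -> bool:
    # Every restriction recorded at issuance must match the incoming request.
    for field in ("merchant_id", "device_id", "channel"):
        required = getattr(bound, field)
        if required is not None and getattr(request, field) != required:
            return False
    return True


bound = TokenDomain(merchant_id="m-1", channel="ecommerce")
print(within_domain(bound, TokenDomain(merchant_id="m-1", channel="ecommerce")))
print(within_domain(bound, TokenDomain(merchant_id="m-2", channel="ecommerce")))
```

A token stolen from one merchant fails this check when presented by a different merchant or through a different channel.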