Authors:

- Rajive Chitajallu, Technology Fellow at Goldman Sachs

- George Wu, VP Engineering at Goldman Sachs

- Bruno Alvisio, Senior Software Engineer at Fortanix

- Arvind Tripathy, Solution Architect at Fortanix

Hadoop continues to be the catalyst for big data analytics. With the acceleration of cloud computing, Hadoop application data is increasingly shipped to, or overflows beyond, the corporate data center in newer formats such as Parquet and ORC.

IT organizations planning either long-term archival or expansion into hybrid analytical platforms will need to consider privacy, compliance, and risk mitigation as part of their data security strategy.

Public cloud platforms allow enterprise customers to protect their most sensitive data, or the entirety of a big data set, in many ways. Server-Side Encryption (SSE) has been the most convenient way to turn on this protection, but it forces the encryption keys to remain in the cloud.

Client-Side Encryption (CSE) and Format-Preserving Encryption (FPE, or tokenization) are critical enablers of data protection that organizations can use to retain full control over their cloud-resident data and to ensure a level of protection suited to the project.

While FPE offers a fast way to protect structured data and apply governance around its visibility, it is not suitable for unstructured, semi-structured, or big data, and it may also require an overhaul of peripheral systems that consume the tokenized data. Fortanix offers prebuilt turnkey integrations with Snowflake for secure data sharing using FPE.

Amazon Web Services (AWS) and Amazon S3 offer several ways to protect data:

- SSE-S3: encryption keys are generated and managed opaquely by Amazon.

- SSE-KMS: encryption keys are generated in AWS Key Management Service (KMS), and/or managed by the customer.

- SSE-C: encryption keys are generated and managed by the customer but presented to Amazon S3.

- CSE-KMS: encryption keys are generated and/or managed in AWS Key Management Service (KMS) by the customer.

- CSE-C: encryption keys are generated and managed by the customer in a proprietary HSM (or KMS).

In all the above options except CSE-C, AWS or Amazon S3 is aware of the cryptographic key material during data transfer. Hence, CSE-C is the approach discussed below.

Overview

The Hadoop open-source project recently introduced CSE-KMS in the current 3.3 snapshot release. With Fortanix, Hadoop data can now be encrypted en route to the cloud.

DistCp (a commonly used Hadoop MapReduce application) works with cloud object storage services such as Amazon S3 through the Hadoop-AWS module, so that HDFS data can be distributed beyond Hadoop data nodes using the s3a:// filesystem.

Fortanix has further integrated its Hardware Security Module (HSM) into the Hadoop-AWS project, to ensure that data is encrypted locally on the Hadoop nodes before it is copied to Amazon S3 buckets. Fortanix makes it seamless to protect data using root encryption keys held inside Fortanix Data Security Manager (DSM).

Applications ranging from classic MapReduce to Hive, Presto, or Spark can interoperate with the encrypted data while running either on-premises or in the cloud.

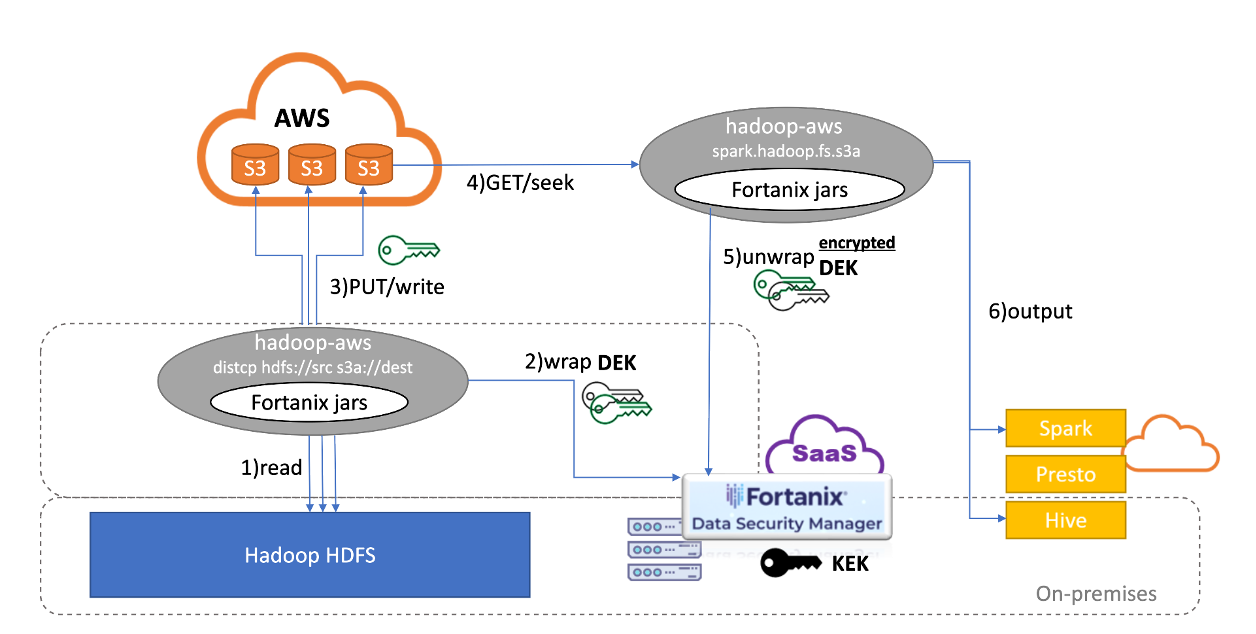

The illustration depicts the cryptographic posture of the Fortanix integration with the AWS SDK (Software Development Kit) and Hadoop. The six highlighted steps fall into two primary flows:

Content Upload:

1. Reading files from HDFS through DistCp

2. Using Fortanix jars per configuration to generate DEKs and wrap them inside Fortanix DSM

3. Uploading encrypted files and encrypted DEKs to Amazon S3

Content Download:

4. Reading files using a Hadoop or Spark program

5. Using Fortanix jars to unwrap DEKs inside Fortanix DSM

6. Presenting sensitive data to the application for analysis

Security

The goal is to keep the root encryption keys fully outside the public cloud. The root keys, or key encryption keys (KEK, in the illustration above), are protected inside a private cluster of NIST-certified FIPS 140-2 Level 3 HSM appliances running Fortanix DSM. The permissions that govern role-based access control (RBAC) for the various users and applications are also kept inside Fortanix DSM.

An administrator in Fortanix DSM generates one or more KEKs. Through a consensus mechanism, she authorizes a group of users and/or applications to use the KEKs, which are never exportable from Fortanix DSM, whether the cluster is hosted on-premises, in the cloud, or consumed as DSM SaaS (Software-as-a-Service).

It is easy to configure CSE-C with Fortanix for the AWS SDK and Hadoop. The KEK is configured in the Hadoop cluster either for all Amazon S3 operations or for individual buckets. Access credentials for Fortanix DSM can be statically configured or sourced from an Identity Provider (IdP) using an established Credential Provider interface. Client certificates or JSON Web Tokens (JWT) serve as dynamic credentials that authorize the Hadoop nodes to perform cryptographic operations against Fortanix DSM.

Fortanix DSM is also used as the source of entropy when generating the unique content encryption key, or data encryption key (DEK, as illustrated above), for each uploaded Amazon S3 object. Inside Fortanix DSM, the KEK wraps the content encryption keys using an authenticated encryption mode, i.e., AES (Advanced Encryption Standard) with a 256-bit key in Galois/Counter Mode (AES-GCM).

The raw and wrapped content encryption keys (also referred to as Cipher Lite keys as per AWS SDK) are returned by Fortanix DSM to the respective Hadoop nodes that initiated the calls through the AWS SDK, at which point the content encryption is performed locally, i.e., on the client side.
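The key hierarchy described above can be illustrated with a minimal, self-contained sketch that uses only the JDK. It is not the Fortanix or AWS SDK implementation: the KEK here is a locally generated stand-in for a key that would in practice never leave Fortanix DSM, and the class and method names (`EnvelopeSketch`, `seal`, `open`) are hypothetical.

```java
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.SecretKeySpec;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Arrays;

/** Illustrative envelope encryption round trip; the KEK is a local stand-in for a key held in DSM. */
class EnvelopeSketch {
    private static final int IV_BYTES = 12;  // 96-bit nonce, the usual choice for GCM
    private static final int TAG_BITS = 128; // 16-byte authentication tag
    private static final SecureRandom RANDOM = new SecureRandom();

    /** Encrypts with AES-GCM and returns IV || ciphertext || tag. */
    static byte[] seal(SecretKey key, byte[] plaintext) throws GeneralSecurityException {
        byte[] iv = new byte[IV_BYTES];
        RANDOM.nextBytes(iv);
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
        byte[] body = cipher.doFinal(plaintext);
        byte[] out = Arrays.copyOf(iv, iv.length + body.length);
        System.arraycopy(body, 0, out, iv.length, body.length);
        return out;
    }

    /** Reverses seal(); throws AEADBadTagException if the input was modified. */
    static byte[] open(SecretKey key, byte[] sealed) throws GeneralSecurityException {
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, sealed, 0, IV_BYTES));
        return cipher.doFinal(sealed, IV_BYTES, sealed.length - IV_BYTES);
    }

    static SecretKey newAes256Key() throws GeneralSecurityException {
        KeyGenerator generator = KeyGenerator.getInstance("AES");
        generator.init(256);
        return generator.generateKey();
    }

    public static void main(String[] args) throws GeneralSecurityException {
        SecretKey kek = newAes256Key(); // assumption: stands in for the non-exportable KEK
        SecretKey dek = newAes256Key(); // one DEK per uploaded object

        // Upload path: the DEK is wrapped under the KEK, the content is encrypted under the DEK.
        byte[] wrappedDek = seal(kek, dek.getEncoded());
        byte[] object = seal(dek, "sensitive row".getBytes(StandardCharsets.UTF_8));

        // Download path: unwrap the DEK, then decrypt the content locally.
        SecretKey recovered = new SecretKeySpec(open(kek, wrappedDek), "AES");
        System.out.println(new String(open(recovered, object), StandardCharsets.UTF_8));
    }
}
```

In the flow the article describes, only the wrap and unwrap steps would involve Fortanix DSM; the content encryption under the DEK stays on the Hadoop node.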

Such encryption operations are repeated sequentially for each block during a multi-part upload of a large file, or performed atomically on smaller HDFS files. Note that the file size threshold for multi-part uploads can be configured in Hadoop, along with the chunk size.

Neither Hadoop nor the AWS SDK retains or persists any information besides the wrapped content encryption keys, which are stored on Amazon S3 in the object metadata or in instruction files, if so configured.
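As a rough illustration of how the threshold and chunk size interact, the sketch below estimates how many upload requests carry data for an object of a given size. The values are illustrative rather than Hadoop defaults, the `MultipartPlan` class is hypothetical, and the assumption that the two settings correspond to the S3A options `fs.s3a.multipart.threshold` and `fs.s3a.multipart.size` should be checked against the Hadoop version in use, since the exact semantics depend on the code path.

```java
/** Estimates how an object would be split for upload; values are illustrative, not Hadoop defaults. */
class MultipartPlan {
    /** Number of upload requests that carry data for an object of the given size. */
    static long partCount(long objectBytes, long thresholdBytes, long partBytes) {
        if (objectBytes < thresholdBytes) {
            return 1; // small object: a single atomic PUT
        }
        return (objectBytes + partBytes - 1) / partBytes; // ceiling division
    }

    public static void main(String[] args) {
        long mib = 1024L * 1024L;
        long threshold = 128 * mib; // assumption: stands in for fs.s3a.multipart.threshold
        long partSize = 64 * mib;   // assumption: stands in for fs.s3a.multipart.size

        System.out.println(partCount(100 * mib, threshold, partSize));       // 1
        System.out.println(partCount(10 * 1024 * mib, threshold, partSize)); // 160
    }
}
```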

Note that the AWS SDK does not allow any encryption mode other than Authenticated or Strict Authenticated, as EncryptionClientV2 prevents it. However, content decryption can be performed using AES-CTR, as required by a ranged GET or partial seek operation against a large Amazon S3 object.
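The reason AES-CTR can serve range reads is that AES-GCM encrypts its payload in counter mode: with a 96-bit IV, the 16-byte block at index i is encrypted with the counter block IV || (i + 2). The following sketch, which uses only the JDK and is not the AWS SDK's code, decrypts an arbitrary byte range of a GCM ciphertext this way. The class name and the all-zero demo key are assumptions made for illustration.

```java
import javax.crypto.Cipher;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.IvParameterSpec;
import javax.crypto.spec.SecretKeySpec;
import java.nio.ByteBuffer;
import java.security.GeneralSecurityException;
import java.util.Arrays;

/** Decrypts a byte range of an AES-GCM ciphertext using AES-CTR (illustrative only). */
class RangeDecryptSketch {
    private static final int BLOCK = 16;

    /** Full-object encryption; returns ciphertext || 16-byte tag. */
    static byte[] encryptGcm(SecretKeySpec key, byte[] iv12, byte[] plain) throws GeneralSecurityException {
        Cipher gcm = Cipher.getInstance("AES/GCM/NoPadding");
        gcm.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(128, iv12));
        return gcm.doFinal(plain);
    }

    /** Decrypts ciphertext bytes [from, to); 'to' must not reach into the trailing tag. */
    static byte[] decryptRange(SecretKeySpec key, byte[] iv12, byte[] gcmBody, int from, int to)
            throws GeneralSecurityException {
        int firstBlock = from / BLOCK;
        int alignedStart = firstBlock * BLOCK;
        // Counter block for the first needed block: 12-byte IV followed by a big-endian 32-bit counter.
        byte[] counter = ByteBuffer.allocate(BLOCK).put(iv12).putInt(firstBlock + 2).array();
        Cipher ctr = Cipher.getInstance("AES/CTR/NoPadding");
        ctr.init(Cipher.DECRYPT_MODE, key, new IvParameterSpec(counter));
        byte[] aligned = ctr.doFinal(gcmBody, alignedStart, to - alignedStart);
        return Arrays.copyOfRange(aligned, from - alignedStart, aligned.length);
    }

    public static void main(String[] args) throws GeneralSecurityException {
        SecretKeySpec key = new SecretKeySpec(new byte[32], "AES"); // all-zero demo key, never use in practice
        byte[] iv = new byte[12];                                   // fixed demo IV, never reuse in practice
        byte[] plain = new byte[100];
        for (int i = 0; i < plain.length; i++) {
            plain[i] = (byte) i;
        }
        byte[] body = encryptGcm(key, iv, plain);
        byte[] slice = decryptRange(key, iv, body, 37, 70);
        System.out.println(Arrays.equals(slice, Arrays.copyOfRange(plain, 37, 70))); // true
    }
}
```

A range read of this kind cannot verify the GCM authentication tag, because the tag covers the whole object; that is the trade-off behind permitting AES-CTR only on the decryption side.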

Once operational, it is imperative to also enable key rotation or rekeying of the root encryption keys. Content encryption keys are automatically regenerated when the Amazon S3 object is overwritten.

Fortanix DSM makes it easy to tightly control this automation, either on demand as often as needed or on a scheduled basis. Fortanix DSM offers unique governance capabilities through its Quorum Policies feature, which coordinates approval workflows within the enterprise for rotation or other sensitive key operations. Prior versions of the root encryption keys can be restricted to decrypt operations only, or disabled entirely, as suited to the data protection or compliance policy.

Performance

Hadoop data movement needs a higher bandwidth than running applications against the cluster. An organization-wide archival initiative for cloud migration will require planning to ensure this bandwidth exists between the corporate and AWS datacenters.

There are four aspects of performance that need to be considered when adopting CSE-C for a cloud migration project:

Content Size

Inarguably, the data volume will have a proportional impact on the time taken to upload data to Amazon S3. In the case of CSE, since only the encrypted content is stored on Amazon S3 and the metadata is a fixed set of key-value pairs, any difference in upload size will stem from the encryption itself.

The ciphertext produced by local content encryption under the DEK is only negligibly larger than the original plaintext. AES-GCM provides confidentiality, integrity, and authentication, and the authentication requires a 16-byte tag to be appended to each ciphertext. Therefore, the bandwidth requirement for uploading CSE-C protected data is expected to be only marginally higher than that of the raw original data.
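The fixed 16-byte overhead can be observed directly with the JDK's AES-GCM implementation. This is a small sketch rather than the SDK's code path; the `GcmOverhead` class and the all-zero demo key and IV are assumptions made for illustration.

```java
import javax.crypto.Cipher;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.SecretKeySpec;
import java.security.GeneralSecurityException;

/** Shows that AES-GCM output is the plaintext length plus a fixed 16-byte tag. */
class GcmOverhead {
    /** Returns the AES-GCM output length (ciphertext plus tag) for a plaintext of the given length. */
    static int sealedLength(int plaintextLength) throws GeneralSecurityException {
        SecretKeySpec demoKey = new SecretKeySpec(new byte[32], "AES"); // all-zero key, demo only
        Cipher gcm = Cipher.getInstance("AES/GCM/NoPadding");
        gcm.init(Cipher.ENCRYPT_MODE, demoKey, new GCMParameterSpec(128, new byte[12]));
        return gcm.doFinal(new byte[plaintextLength]).length;
    }

    public static void main(String[] args) throws GeneralSecurityException {
        for (int size : new int[] {0, 1, 1_000, 1_000_000}) {
            int overhead = sealedLength(size) - size;
            System.out.println(size + " bytes -> +" + overhead + " bytes"); // overhead is always 16
        }
    }
}
```

Because the overhead is constant per ciphertext, its relative cost shrinks as objects or parts grow: 16 bytes on a 128 MB part is roughly one part in eight million.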

Total Number of Files

Every HDFS file becomes an Amazon S3 object. Therefore, there is a one-to-one (1-1) mapping between a DEK and an S3 object or HDFS file. Since the number of encryption operations scales linearly with the number of files in the DistCp job, this aligns well with the linear capacity models offered by Fortanix DSM clusters or DSM SaaS.

Average Size of the File

A DistCp job could include ten million (10^7) small files averaging, say, 125 MB each, resulting in a 1.25 PB (petabyte) upload. Such a job will be quicker than a DistCp job with a hundred thousand (100,000) files averaging 10 GB each, which results in an upload of 1 PB, or about 7.8 million blocks of 128 MB.

As Hadoop distributes the blocks of a large file, and CSE-C needs a sequential upload of all the blocks, the multi-part operations tend to be slower than atomic operations. Therefore, the cloud migration initiative can be optimized by structuring the relevant content on appropriately sized and partitioned Hadoop clusters.
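The figures in this comparison can be checked with a few lines of arithmetic, assuming decimal units (1 MB = 10^6 bytes). The `UploadArithmetic` class and its helper names are hypothetical.

```java
/** Reproduces the upload-size arithmetic using decimal units (assumption: 1 MB = 10^6 bytes). */
class UploadArithmetic {
    static final long MB = 1_000_000L;
    static final long GB = 1_000L * MB;
    static final long PB = 1_000_000L * GB;

    static long totalBytes(long fileCount, long averageFileBytes) {
        return fileCount * averageFileBytes;
    }

    /** Number of blocks if the whole volume is cut into fixed-size blocks. */
    static long blocks(long totalBytes, long blockBytes) {
        return totalBytes / blockBytes;
    }

    /** Blocks needed for one file, rounding the last partial block up. */
    static long blocksPerFile(long fileBytes, long blockBytes) {
        return (fileBytes + blockBytes - 1) / blockBytes;
    }

    public static void main(String[] args) {
        long smallFilesJob = totalBytes(10_000_000L, 125 * MB);
        long largeFilesJob = totalBytes(100_000L, 10 * GB);

        System.out.println((double) smallFilesJob / PB);      // 1.25
        System.out.println((double) largeFilesJob / PB);      // 1.0
        System.out.println(blocks(largeFilesJob, 128 * MB));  // 7812500
        System.out.println(blocksPerFile(10 * GB, 128 * MB)); // 79
    }
}
```

Counted per file, each 10 GB file needs 79 blocks because the last partial block is rounded up, so the job uploads about 7.9 million blocks in practice, close to the 7.8 million obtained by dividing the total volume.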

Hadoop Cluster Size

A DistCp job spawns several mappers and distributes them to available Hadoop nodes. Each map task starts a TLS (Transport Layer Security) session with Fortanix DSM and reuses it until renewal is needed. Depending on the volume of the DistCp job, and the number of participating Hadoop nodes, the number of TLS sessions will vary.

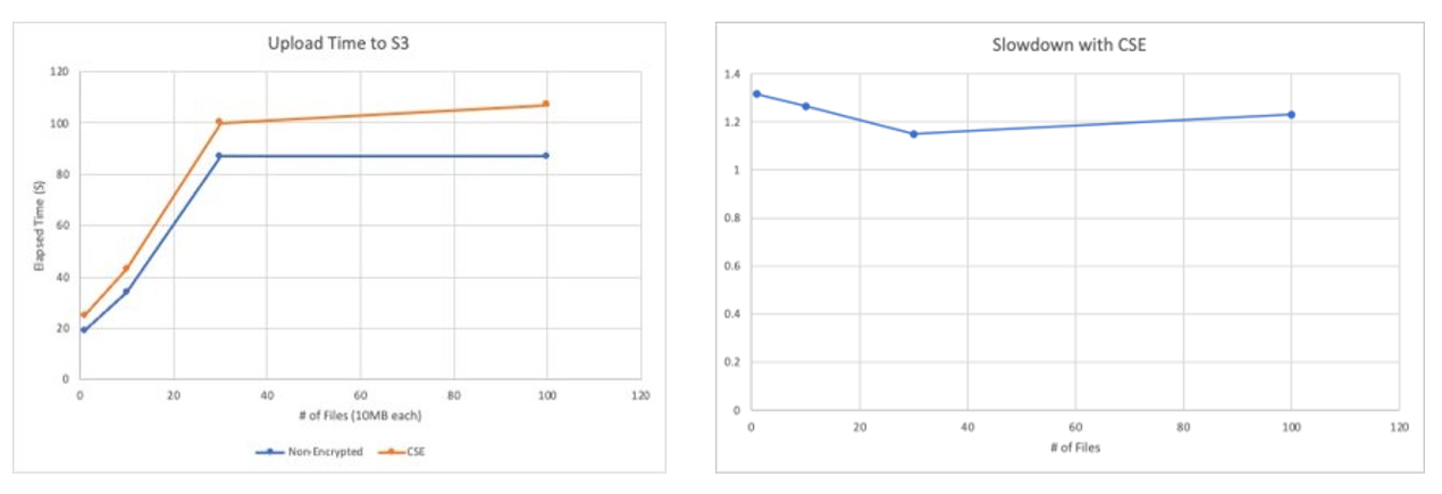

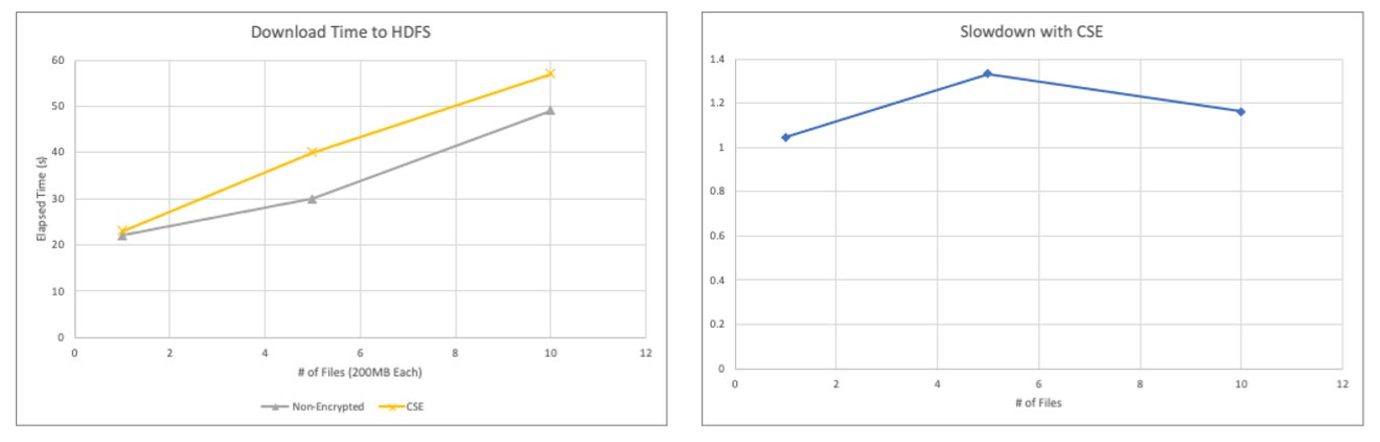

Fortanix has designed and simulated real-world workloads that move big data from on-premises to Amazon S3. The above charts suggest around 25% overhead during cloud storage operations with CSE-C enabled, depending on the factors discussed above.

While this could be specific to the tests conducted by Fortanix, the observation holds across different file sizes and volumes when several aspects of the DistCp job are varied. With improvements to the AWS SDK and more efficient load staging, the performance of CSE-C enabled operations can be further improved. Cloud administrators will need to consider this trade-off between security and performance.

Summary

Data scientists and cloud administrators are now armed with another valuable tool in their arsenal and may forge ahead knowing that the underlying data is secure at-rest, with keys under the auspices of the rightful data owners.

This freedom to move data without compromising on its security will be important for organizations that use multiple cloud platforms. Unwarranted data exfiltration will no longer be a concern with centralized governance, and simplicity of audits against the data or metadata will surely make compliance with local and regional laws easier.

Organizations that move data into cloud object storage for analytics, whether governmental agencies like Georgia State or sports teams like Seattle Hawks, will find cloud security a critical enabler of confidentiality and trust within their business operations. To help them take advantage of this, Fortanix has open-sourced the Amazon S3 CSE-C implementation against the AWS SDK for Java on GitHub.

Advantages of CSE-C with Fortanix:

- Total control by data owners over residency of and permissions granted to the encryption keys.

- Separation between the cloud service provider, vendor organization, and data owners.

- Consent for decryption can be obtained through a quorum workflow before consumption.

- Tamper-proof audit of all usage and management of the root keys inside a NIST-certified HSM, or in an HSM service that is ISO 27001 and SOC 2 compliant.

- Streamlined logs that can be correlated and exported for review with regulators.

- Extensibility of the architecture leads to secure data sharing across clouds and regions.

To further improve the security posture, data scientists can also adopt in-use protection to secure the runtime in-memory footprint of the data loaded by machine-learning (ML) or artificial intelligence (AI) algorithms.

Fortanix offers a platform called Confidential Artificial Intelligence (CAI) in conjunction with Fortanix DSM, through which privacy-preserving analytics can be achieved at scale on commodity hardware between multiple parties with varying degrees of mutual trust.
