The launch of the ChatGPT natural language model by OpenAI last November has generated enormous interest and controversy within the field of artificial intelligence (AI) and in the mainstream media.

The earlier release by OpenAI of their DALL-E 2 image generation system was no less controversial, as people considered the limits of AI creativity and the prospect of talented human artists around the world being, somehow, supplanted by a robot artist pumping out extraordinary visual works from a continuously evolving virtual studio.

Don’t throw your pastels and brushes out yet: AI systems lack a quintessentially human characteristic – imagination. Human creativity will always exceed that of a programmatic machine, which is why we came up with the technology to build them, but we will avoid that rabbit hole for the time being…

At Fortanix, we provide state-of-the-art data security solutions for customers developing and implementing AI systems. We are also actively involved with emerging Web 3.0 technology, and I will be reporting on my participation in the Plumia internet country project shortly.

Prior to joining the first Plumia cohort, in November 2022, the team at SafetyWing promoted a competition to visualize a future internet country using the DALL-E 2 API (Application Programming Interface) to generate an image, based on a descriptive text input.

Details of how DALL-E 2 works can be found via the provided FAQ page at: https://dallery.gallery/dall-e-ai-guide-faq/.

DALL-E 2 is fun to play with, but my colleagues and I started to think about how we could use the power of confidential computing to support our entry to the competition.

We wanted to enable different team members to contribute their views, without any anxiety about whether they held an opinion that diverged from other members of the team. To do this we wrote a simple application in Python that collected individual text responses to the question “what would a future internet country look like?”

The application was containerized and deployed by Fortanix technology to a secure Trusted Execution Environment (TEE), using Intel® Software Guard Extensions (Intel SGX) and Microsoft Azure Confidential Computing infrastructure in the public cloud.

Since the application code and received data were encrypted in memory, with no access from outside the TEE, the different views expressed by the team members were aggregated in an anonymized and secure manner. Hence the aggregate opinion of the group was compiled without it being possible to resolve who had contributed which idea.
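To make the aggregation step concrete, here is a minimal sketch of how responses might be combined so that the output carries no link back to its authors. The function name `aggregate_responses` and the dictionary input are assumptions made for illustration, not the code we deployed, and the sketch says nothing about the TEE itself, which is what actually keeps the raw inputs out of reach.

```python
import random


def aggregate_responses(responses_by_member, rng=None):
    """Combine member responses into one text with no link to the authors.

    `responses_by_member` maps a (hypothetical) member identifier to the
    text that member submitted.
    """
    rng = rng or random.SystemRandom()
    # Keep only the non-empty text; member identifiers are discarded here.
    texts = [t.strip() for t in responses_by_member.values() if t.strip()]
    # Shuffle so that submission order cannot hint at who wrote what.
    rng.shuffle(texts)
    return " ".join(texts)
```

Only the shuffled, joined text leaves this function, so later steps never see an identifier.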

The aggregate text formed from the team’s inputs by the secure application could have been used as the input to the DALL-E 2 image generator.

However, we wanted to add some additional functionality to the application. This is possible because Confidential Computing permits arbitrary application functionality within the boundary of the TEE – it does not have the limitations on performance or complexity that are currently associated with cryptographic methods of privacy-preserving computation.

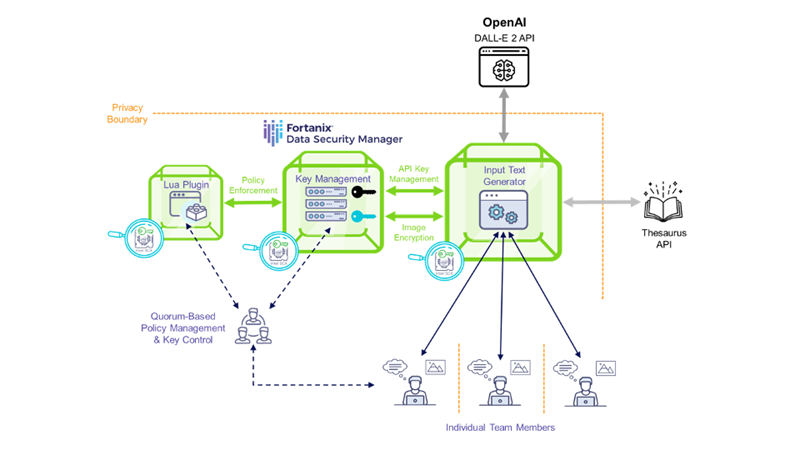

Based on the composite text formed from our human inputs, we introduced two additional steps to create our input to the DALL-E 2 system. First, we filtered keywords and phrases, ranked keywords by occurrence within the aggregate text, and then queried a public thesaurus API to “boost” our collective focus with additional synonyms related to our keywords list.

Synonyms returned by the online thesaurus were given the same rank as the keyword that prompted them, and we then deduplicated the final keyword list. The synonyms were integrated into the aggregate text as a simple string, alongside the keyword that prompted them.
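The keyword step described above can be sketched roughly as follows. This is an illustration under stated assumptions: the stopword list, the function names, and the `lookup_synonyms` callable are all hypothetical, and `fake_thesaurus` stands in for the public thesaurus API we queried, whose interface is not reproduced here.

```python
import re
from collections import Counter

# A deliberately tiny stopword list, assumed for illustration only.
STOPWORDS = {"a", "an", "and", "the", "of", "in", "to", "with", "is", "for", "on", "that"}


def rank_keywords(text, top_n=5):
    """Return the most frequent non-stopword terms, most common first."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS and len(w) > 2)
    return [word for word, _ in counts.most_common(top_n)]


def boost_with_synonyms(keywords, lookup_synonyms):
    """Place each keyword's synonyms next to it, dropping duplicates."""
    seen = set()
    boosted = []
    for keyword in keywords:
        for term in [keyword, *lookup_synonyms(keyword)]:
            if term not in seen:
                seen.add(term)
                boosted.append(term)
    return boosted


def fake_thesaurus(word):
    """Stand-in for a thesaurus lookup (hypothetical data)."""
    table = {"open": ["free", "accessible"], "digital": ["virtual", "open"]}
    return table.get(word, [])
```

Because each synonym is emitted directly after the keyword that prompted it, the synonyms inherit that keyword's position in the ranking, and a term that appears twice is kept only at its first, higher-ranked position.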

The second step we took was to apply a policy to the aggregate text to ensure that the resultant input to DALL-E 2 was compatible with the specified API request parameters. The policy was enforced via a REST API call to a Plugin application protected by the Fortanix Data Security Manager™ solution.

The Plugin evaluated aggregate text and applied a set of rules to create the final text for use with DALL-E 2. The principal effect of the policy was to restrict the aggregate text size, with the additional thesaurus words, to the maximum size of 1,000 characters.
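As an illustration of the size rule, the sketch below caps the final prompt at 1,000 characters. It is written in Python for readability and is not the Plugin code itself; the function name, the whitespace normalization, and the choice to cut at a word boundary are assumptions.

```python
MAX_PROMPT_CHARS = 1000  # the maximum size stated above


def apply_length_policy(aggregate_text, extra_terms, limit=MAX_PROMPT_CHARS):
    """Build a prompt that never exceeds `limit` characters.

    The aggregate text takes priority; thesaurus terms are appended
    one by one only while they still fit.
    """
    prompt = " ".join(aggregate_text.split())  # collapse runs of whitespace
    if len(prompt) > limit:
        cut = prompt[:limit]
        # Prefer to cut at a word boundary rather than mid-word.
        return cut.rsplit(" ", 1)[0] if " " in cut else cut
    for term in extra_terms:
        candidate = f"{prompt} {term}" if prompt else term
        if len(candidate) > limit:
            break
        prompt = candidate
    return prompt
```

The same function is a natural place to hang further rules, such as rejecting disallowed terms, without changing the caller.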

For the purposes of our experiments with DALL-E 2 as part of the Plumia competition, we provided basic inputs to our secure input generator application.

In practice, however, the policy controls we implemented could be used to prevent the incorporation of harmful content and ensure compliance with the policies applying to use of DALL-E 2, or any other AI image generation service.

Fortanix DSM also provided robust security for the API key required to authenticate requests to DALL-E 2. Using Intel® SGX to protect the API key in use, applying quorum-based control of the cryptographic secret, Fortanix DSM validated requests for use of the API key using a unique certificate for our secured input generator application.

The certificate was automatically generated at runtime through Fortanix Node Agent™ technology, using the attestation report of the Intel® SGX TEE. Attestation is a fundamental property of Confidential Computing that is used to check the security in place and the integrity of deployed applications before any sensitive data is processed.

For those with a technical interest, details of attestation methods for Intel® SGX are provided here: Attestation Services for Intel® Software Guard Extensions.

The figure below provides an overview of the system we developed, and we will discuss the relevance of the “privacy boundary” in due course:

Using our system, we provided several rounds of text inputs, with the resultant, ephemeral, input to DALL-E 2 being masked from the participating team members.

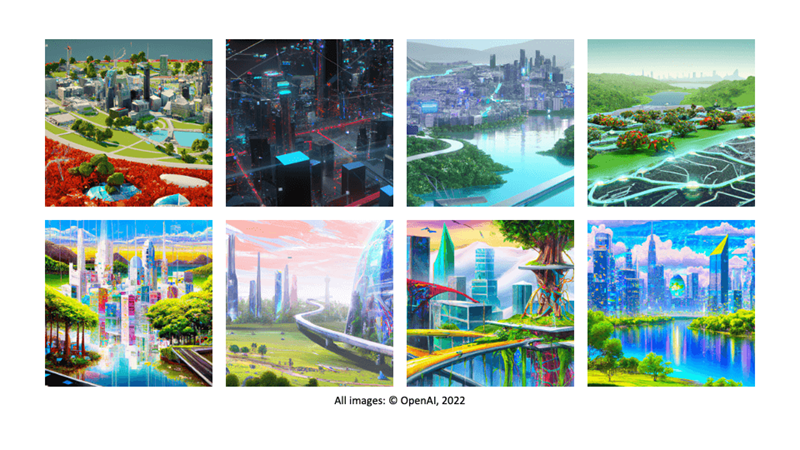

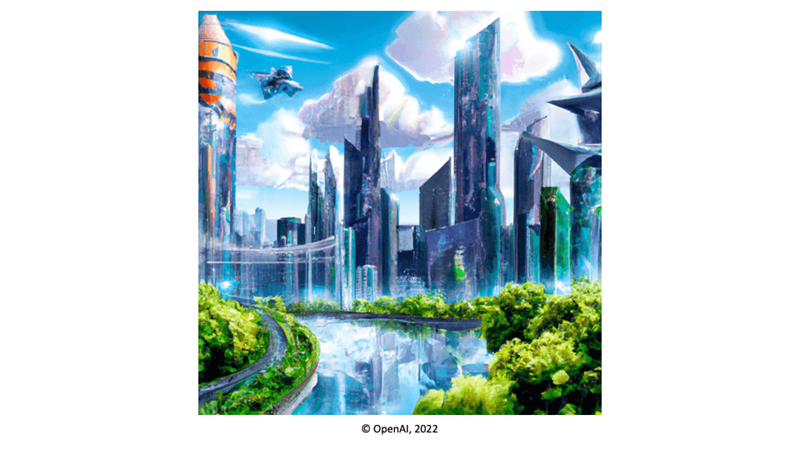

A collage created from just a small number of generated outputs from DALL-E 2 is provided below, along with the single image that we eventually selected for submission to the Plumia competition:

I think you will agree that the AI system created some interesting images from the combined imaginations of a diverse and creative group of Fortanix engineers. We were happy with the results and with the seamless interaction of the secured input generator application with both the DALL-E 2 API and Fortanix DSM.

Once again, Confidential Computing demonstrated its inherent flexibility and security for a specific, yet easily transferable, use-case in the AI domain. On the output side, the 1,024 × 1,024-pixel images returned from the DALL-E 2 API were encrypted within the TEE using Fortanix DSM, with access to the decrypted image being restricted to members of the team.

In this way, the entire lifecycle was secured, from origination of the raw text inputs, through to the generated image representing our collective view of a future internet country.

Returning to the privacy boundary that I marked on the overview of our solution: The emergence of centralized AI services like DALL-E 2, ChatGPT, and others provides significant benefits to users in terms of the continuous learning of new, more effective, models in respect of the intended computational task and the ability of people to overcome barriers to knowledge and experience – I could never sketch or paint any of the images we generated by hand.

Input data can inform an AI system as to the success of its outputs, and the capture of received input data represents a valuable resource for the designers of other AI systems.

Based on our solution architecture, the privacy of the human users is protected within the team, since no party needs to have knowledge of the other parties or their expressed input, and the privacy of the team is protected with respect to the centralized AI service, as the REST API request for an output image is generated by the secured application, leaving the identities of the various human users unobtainable.

Hence, any data captured by the centralized AI server can only be linked to the API key credential. Safe and secure exchange of data with cloud services and other centralized API endpoints is something we focus heavily on at Fortanix and there are some interesting questions that arise in respect of data ownership, individual consent, and jurisdictional control.

OpenAI touch on some of the considerations that are specific to the use of DALL-E 2 and the team worked to ensure that we operated within these constraints.

The API key was also protected by our solution architecture, to ensure no unauthorized use or any need to hardcode the API key within our deployed application.
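The idea of keeping the API key out of the application code can be sketched as follows. The `fetch_api_key` callable is hypothetical and stands in for whatever retrieves the secret at runtime (in our case, a request to Fortanix DSM, whose interface is not shown here). The endpoint and JSON fields are assumed to match OpenAI's image generation API; the sketch only builds the request and does not send it.

```python
import json
import urllib.request

# Assumed to be OpenAI's image generation endpoint.
IMAGES_ENDPOINT = "https://api.openai.com/v1/images/generations"


def build_image_request(prompt, fetch_api_key):
    """Build (but do not send) an image request without a hard-coded key.

    `fetch_api_key` is a hypothetical callable that returns the secret
    at the moment it is needed.
    """
    api_key = fetch_api_key()
    body = json.dumps({"prompt": prompt, "n": 1, "size": "1024x1024"}).encode("utf-8")
    return urllib.request.Request(
        IMAGES_ENDPOINT,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the key source in as a callable means the secret exists only in memory for the lifetime of the request, and the same code can be exercised in tests with a dummy key.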

The configurable quorum approval policies within Fortanix DSM and the flexibility of the Lua Plugin script used for policy enforcement over the aggregate input mean that users can exercise democratic control over the functionality of the input generator and adapt to changes in policy at OpenAI or at the team level.

The absence of any individual “super users” with access to the input text data, together with the secure exchange of cryptographic secrets based on validated attestation of the secure TEE, created an encapsulated environment within which we could manage all of our interaction with the DALL-E 2 system.

We are only seeing the very first glimmers of light in the universe of AI-based systems and services. The fair and ethical implementation of the AI systems we will depend upon in future is contingent on the twin pillars of data privacy and data security.

I look forward to providing regular updates on the work we carry out at Fortanix in this area and to further exploration of the considerations around use of AI models for both serious and light-hearted purposes.