I started writing about secrets injection in a Kubernetes environment for my own benefit, mainly to find a secure way of sharing secrets with apps running on DOKS (link).

To my surprise, that post picked up over 50 views in a week or so (possibly thanks to Adrian Goins for his honest feedback on it), and I really appreciate all of the feedback that came through over the week as well (it’s my first 50 views as a newbie blog poster!).

Since I also use Azure in my “half-life” environment, I thought I’d also test out Fortanix’s secret injection on Microsoft AKS - Azure Kubernetes Service.

Fortanix promotes consistency across multi-cloud environments as one of its major strengths, so I expect the config to be fairly similar to the one on DOKS.

One thing to note is that Microsoft Azure provides support for two different types of instances when provisioning your kubernetes cluster worker nodes:

- The standard instance-based nodes: These are your typical instances depending on the workload.

- The SGX enabled instance-based nodes: Specifically, the DCsv2-Series. These instances allow you to build and run secure enclave-based applications or containers.

For this blog, I will lightly touch on the DCsv2-Series worker nodes from an AKS deployment perspective; I’ll cover their use and benefits in a separate blog post in the coming days.

Let’s start with AKS first.

Azure Kubernetes Setup

So once you have your Azure credits squared away (or if you don’t have an Azure account yet, you can sign up for a trial account with some free credits here: https://azure.microsoft.com/en-us/free/), we can start configuring our managed kubernetes service.

When you first log in to your Azure portal, you’ll be greeted with a few services, including “Kubernetes services”:

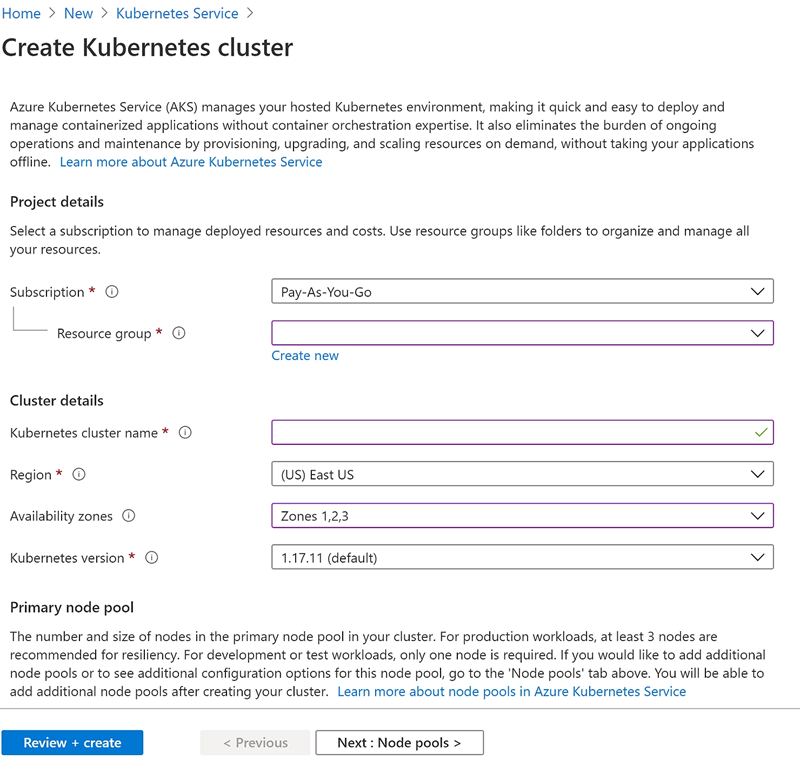

Once you click on the button, deploying Kubernetes with AKS is fairly self-explanatory.

Start with giving your subscription details, resource group, name for your kubernetes cluster and some other details around AKS availability:

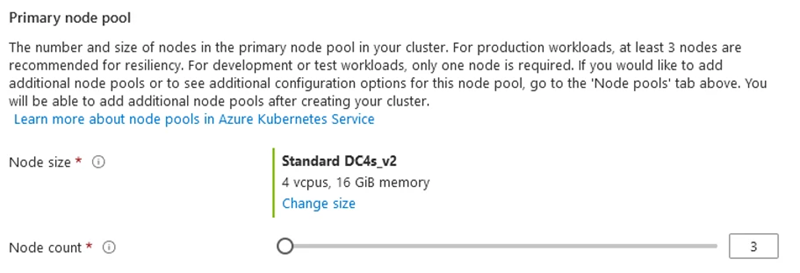

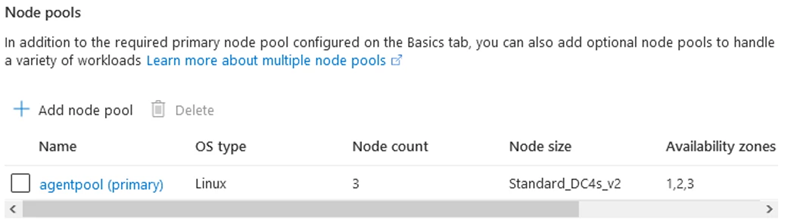

We’ll then need to select the type of worker nodes for your Kubernetes cluster. I’ve chosen a DCsv2-Series instance for this example (due to the next blog post), but you can click on “Change size” to modify this:

FYI: these are the only DCsv2-Series instances currently available in Azure:

Make sure you confirm the worker node pool through the review page (and change the name of the pool as you require):

And the rest is really around your networking, tags, and other bits and pieces you want to set for your kubernetes cluster. Wait for the service to start and your kubernetes cluster is up and running!
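If you prefer the command line to the portal, the steps above can be approximated with the Azure CLI. This is only a sketch and not how I built the cluster in this post: the function name is my own, every argument is a placeholder you supply, and DCsv2-Series sizes such as Standard_DC2s_v2 are only offered in some regions, so check availability first.

```shell
# Sketch: create a resource group and a single-node AKS cluster from the CLI.
# Assumes the Azure CLI is installed and you are already logged in.
create_aks_cluster() {
  local rg="$1"        # resource group name (placeholder)
  local cluster="$2"   # AKS cluster name (placeholder)
  local location="$3"  # Azure region (placeholder)
  local vm_size="$4"   # worker node size, e.g. a DCsv2-Series size

  # Create (or reuse) the resource group that will hold the cluster.
  az group create --name "$rg" --location "$location" || return 1

  # Create the cluster with one worker node of the requested size.
  az aks create \
    --resource-group "$rg" \
    --name "$cluster" \
    --node-count 1 \
    --node-vm-size "$vm_size" \
    --generate-ssh-keys
}

# Example with hypothetical names:
# create_aks_cluster my-rg my-aks uksouth Standard_DC2s_v2
```

The portal wizard exposes more options (networking, tags and so on) than this sketch sets, so treat it as a starting point.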

Now we need the kubernetes config file. I prefer to run the kubectl commands from my own WSL installation, but you can also run this natively within your Azure Cloud Shell (YMMV). To get the kubernetes config file auto-generated and copied on to your device, we still must go through the Azure Cloud Shell.

Fire up Azure CLI (most Windows 10 installations would have this pre-installed, but if yours does not, head over to https://docs.microsoft.com/en-us/cli/azure/install-azure-cli for a detailed guide on how to install Azure CLI). We will be running all of our commands via Azure CLI, but all of the files and commands are actually running in your Azure Cloud Shell.

Upon executing Azure CLI, you’ll be greeted with the following message:

To sign in, use a web browser to open the page https://microsoft.com/devicelogin and enter the code XXXXXXXX to authenticate. This code will expire in 15 minutes.

You may also be asked to create an Azure Cloud Shell account online at this time, and as mentioned above that’s because Azure CLI will sync with your Azure Cloud Shell account. Once you type in the code in your browser:

Authenticated.

Requesting a cloud shell instance...

Succeeded.

Requesting a terminal (this might take a while)...

Welcome to Azure Cloud Shell

Type "az" to use Azure CLI

Type "help" to learn about Cloud Shell

PS /home/ME>

Quickly set your subscription if you have not done so yet and also have the kubernetes config saved to your home directory on Azure Cloud Shell:

PS /home/ME> az account set --subscription <your_subscription>

PS /home/ME> az aks get-credentials --resource-group <resource_group> --name <aks_cluster_name>

Make sure the kubernetes config file is generated in your Azure Cloud Shell:

PS /home/ME> dir .kube/config

Directory: /home/ME/.kube

Mode LastWriteTime Length Name

---- ------------- ------ ----

----- 11/04/2020 2:41 AM 9654 config
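By default `az aks get-credentials` merges the cluster into `~/.kube/config`. If you would rather keep the AKS credentials in their own file, the command also accepts a `--file` argument. A small sketch, assuming the Azure CLI is logged in wherever you run it; the function name and the output path are my own placeholders:

```shell
# Sketch: write the AKS kubeconfig to a dedicated file instead of ~/.kube/config.
fetch_kubeconfig() {
  local rg="$1"        # resource group name
  local cluster="$2"   # AKS cluster name
  local out_file="$3"  # where to write the kubeconfig

  az aks get-credentials \
    --resource-group "$rg" \
    --name "$cluster" \
    --file "$out_file" || return 1

  # The kubeconfig contains cluster credentials, so keep it private.
  chmod 600 "$out_file"
}

# Example:
# fetch_kubeconfig <resource_group> <aks_cluster_name> ./aks-config
# export KUBECONFIG="$PWD/aks-config"
# kubectl get nodes
```

Pointing `KUBECONFIG` at the dedicated file keeps kubectl from touching any other clusters you already have configured.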


$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-akstestpool-20706632-vmss000000 Ready agent 99m v1.17.11

$ kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-869cb84759-95vmk 1/1 Running 0 99m

kube-system coredns-869cb84759-hhvtk 1/1 Running 0 98m

kube-system coredns-autoscaler-5b867494f-mqdwt 1/1 Running 0 99m

kube-system dashboard-metrics-scraper-5ddb5bf5c8-z2rzq 1/1 Running 0 99m

kube-system kube-proxy-r7t7k 1/1 Running 0 98m

kube-system kubernetes-dashboard-5596bdb9f-7fht9 1/1 Running 1 99m

kube-system metrics-server-5f4c878d8-qcmzj 1/1 Running 0 99m

kube-system tunnelfront-54bc4895d5-djzrh 1/1 Running 0 99m

$ kubectl get ns

NAME STATUS AGE

default Active 100m

kube-node-lease Active 100m

kube-public Active 100m

kube-system Active 100m

That simple. Congratulations, you now have a complete AKS deployment in your Azure account!

Fortanix Setup

Similar to what we have done with DOKS, I’m going to check whether custom admission controllers can be registered on my new AKS deployment:

$ kubectl api-versions | grep -i admissionregistration

admissionregistration.k8s.io/v1

admissionregistration.k8s.io/v1beta1
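If you want to script this check rather than eyeball the output, something like the following would do. It is a sketch: the function name is mine, and it only looks for the stable `admissionregistration.k8s.io/v1` group version shown above.

```shell
# Sketch: succeed only if the cluster serves admissionregistration.k8s.io/v1.
has_admission_v1() {
  # -x matches the whole line, -F treats the pattern as a fixed string,
  # so the v1beta1 entry on its own does not count as a match.
  kubectl api-versions | grep -qxF 'admissionregistration.k8s.io/v1'
}

# Example:
# if has_admission_v1; then
#   echo "admission webhooks can be registered"
# else
#   echo "admissionregistration.k8s.io/v1 not available" >&2
# fi
```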

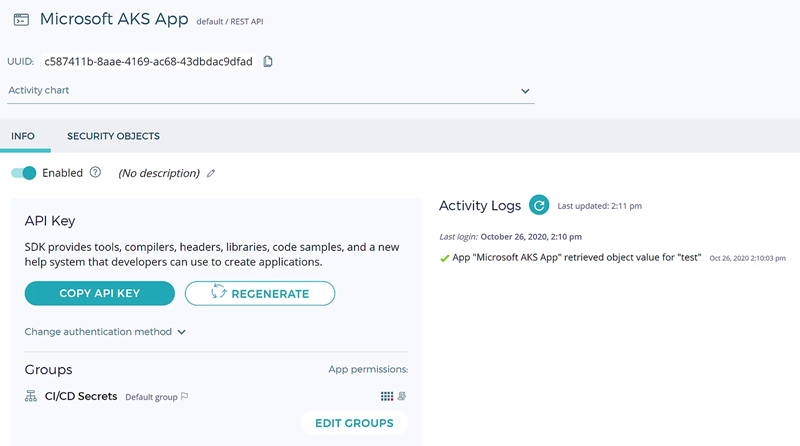

As with DOKS, I’ll configure an App within Fortanix DSM:

And I’ll need to download the installation package that includes all of the necessary files required to automatically create the service accounts, namespaces, webhooks and the containers required for the admission controller. This is available if you are registered via support.fortanix.com.

Once the package is downloaded, make sure the service-account.yaml file has the correct API key or JSON Web Token details, so that the webhook uses the correct credentials when the sidecar container communicates with DSM:

$ cat service-account.yaml
# Service account used for the mutated pods
apiVersion: v1
kind: ServiceAccount
metadata:
  name: demo-sa
  namespace: <your namespace>
automountServiceAccountToken: false
---
# This secret holds credentials used by the secret-agent to authenticate to DSM
# Note that the secret name is the service account name with a suffix: -dsm-credentials
# This is how the controller associates the secret to the service account.
apiVersion: v1
kind: Secret
metadata:
  name: demo-sa-dsm-credentials
  namespace: <your namespace>
type: Opaque
stringData:
  api_endpoint: https://dsm.fortanix.com
  api_key: <api-key>
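It is easy to run the deployment with a template placeholder still in place. A quick pre-flight check along these lines can catch that. This is my own sketch, not part of the Fortanix package, and it only knows about the two placeholders that appear in the file above.

```shell
# Sketch: fail if a manifest still contains the template placeholders.
check_placeholders() {
  local file="$1"

  # Refuse to report success for a file we cannot read.
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 2; }

  # -F: fixed strings, -n: show the line numbers of any leftovers.
  if grep -nF -e '<your namespace>' -e '<api-key>' "$file"; then
    echo "placeholders still present in $file" >&2
    return 1
  fi
}

# Example:
# check_placeholders service-account.yaml && echo "ready to deploy"
```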

$ cat sidecar-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: fortanix-webhook-config
  namespace: fortanix
data:
  controller-config.yaml: |
    authTokenType: api-key # acceptable values: `api-key`, `jwt`.
    secretAgentImage: ftxdemo/secret-agent:0.2
    proxySettings:
      #httpProxy: 'http://example.com'
      #httpsProxy: 'https://example.com'
      #noProxy: '*.example.com,1.2.3.4'
    tokenVolumeProjection:
      #addToAllPods: true
      #audience: https://dsm.fortanix.com
      #expirationSeconds: 3600

Helm is available, but for this guide, we’ll be deploying all the necessary components automatically via the script provided:

$ ./deploy.sh

namespace/fortanix created

creating cert...

<some log output>

... created

secret/demo-sa-dsm-credentials created

Check that the namespaces were created automatically by the script we ran:

$ kubectl get ns

NAME STATUS AGE

default Active 107m

fortanix Active 43s

fortanix-demo Active 29s

kube-node-lease Active 107m

kube-public Active 107m

kube-system Active 107m

Noting that the:

-

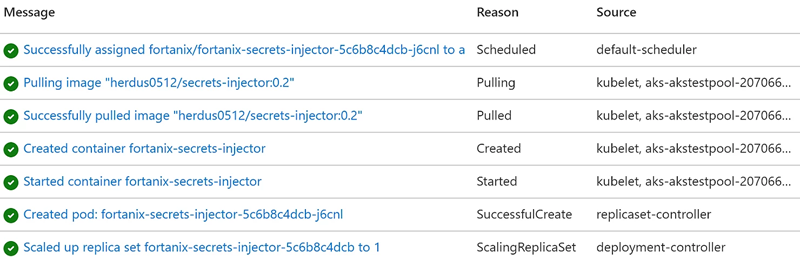

fortanix namespace contains the admission controller container (automatically pulled from the public repository from Fortanix) in the pod named fortanix-secrets-injector

-

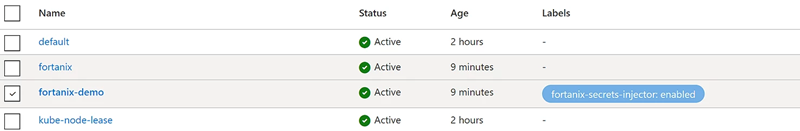

fortanix-demo namespace is created with the label of “fortanix-secrets-injector: enabled”. This is ensuring any pods running in that namespace will be automatically targeted for mutation (Azure Portal screenshot is below)
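The deploy script sets this label on fortanix-demo for you. If you want the same behaviour in another namespace, the label can presumably be applied by hand with standard kubectl commands. A minimal sketch, assuming the label key and value quoted above are all the webhook looks for; the function names are mine:

```shell
# Sketch: opt a namespace in to secret injection by setting the label
# that the deploy script puts on fortanix-demo.
enable_injection() {
  local ns="$1"
  kubectl label namespace "$ns" fortanix-secrets-injector=enabled --overwrite
}

# List every namespace that currently carries the label.
list_injection_namespaces() {
  kubectl get namespaces -l fortanix-secrets-injector=enabled -o name
}

# Example:
# enable_injection my-app
# list_injection_namespaces
```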

Double check the Fortanix DSM Secrets Injector pod (with the admission controller) is definitely available / ready:

$ kubectl get pods -A | grep -i fortanix

fortanix fortanix-secrets-injector-5c6b8c4dcb-j6cnl 1/1 Running 0 22s

$ kubectl -n fortanix logs fortanix-secrets-injector-5c6b8c4dcb-j6cnl

2020/10/26 02:49:14 Configuration:

2020/10/26 02:49:14 controllerConfigFile: /opt/fortanix/controller-config.yaml

2020/10/26 02:49:14 webServerConfig.port: 8443

2020/10/26 02:49:14 webServerConfig.certFile: /opt/fortanix/certs/cert.pem

2020/10/26 02:49:14 webServerConfig.keyFile: /opt/fortanix/certs/key.pem

2020/10/26 02:49:14 AuthTokenType: api-key

2020/10/26 02:49:14 SecretAgentImage: herdus0512/secret-agent:0.2

2020/10/26 02:49:14 Server listening on port 8443
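Rather than polling `kubectl get pods` by hand, you can block until the injector has rolled out. A sketch only: I am assuming the pod is managed by a Deployment called fortanix-secrets-injector (the pod name suggests it, but I have not confirmed it against the package), so pass a different name if yours differs.

```shell
# Sketch: wait until the injector Deployment reports a finished rollout.
wait_for_injector() {
  # Assumed Deployment name; override with the first argument if needed.
  local deployment="${1:-fortanix-secrets-injector}"
  kubectl -n fortanix rollout status "deployment/$deployment" --timeout=120s
}

# Example:
# wait_for_injector && echo "admission controller is ready"
```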

Or alternatively check via the Azure Portal (very slick!) that the admission controller was properly deployed as well:

If you navigate to namespaces in the Azure Portal, you’ll also see that the label “fortanix-secrets-injector: enabled” has been set on the fortanix-demo namespace, matching what the CLI shows:

Now we create a secret (or use one that is already available) within DSM:

Specify a simple single-container pod to be deployed within the namespace fortanix-demo:

$ cat single-pod.yaml
# This example shows how to use templates to render secret values
apiVersion: v1
kind: Pod
metadata:
  namespace: fortanix-demo
  name: test-demo-pod
  annotations:
    secrets-injector.fortanix.com/inject-through-environment: "false"
    secrets-injector.fortanix.com/secrets-volume-path: /opt/myapp/credentials
    secrets-injector.fortanix.com/inject-secret-test: "test"
spec:
  serviceAccountName: demo-sa
  terminationGracePeriodSeconds: 0
  containers:
  - name: busybox
    imagePullPolicy: IfNotPresent
    image: alpine:3.8
    command:
    - sh
    - "-c"
    - |
      sh << 'EOF'
      sleep 100m
      EOF

And check that the mutation worked and the secret was injected:

$ kubectl create -f single-pod.yaml

pod/test-demo-pod created

$ kubectl get pods -A | grep test-demo-pod

fortanix-demo test-demo-pod 1/1 Running 0 40s

$ kubectl -n fortanix logs fortanix-secrets-injector-5c6b8c4dcb-j6cnl | grep -i mutating

2020/10/26 02:51:24 Mutating 'fortanix-demo/test-demo-pod'

$ kubectl -n fortanix-demo describe pod test-demo-pod | grep -i injected

Annotations: secrets-injector.fortanix.com/injected: true

$ kubectl -n fortanix-demo exec test-demo-pod -- ls -la /opt/myapp/credentials/test

-rw-r--r-- 1 root root 14 Oct 26 02:51 /opt/myapp/credentials/test

$ kubectl -n fortanix-demo exec test-demo-pod -- cat /opt/myapp/credentials/test

Hi I'm a Test!
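The manual checks above can be rolled into one function if you want to repeat them, for example in a smoke test. This is a sketch under a couple of assumptions: the `injected` annotation is the one shown in the describe output, and the container image ships a `test` binary (alpine does). It deliberately checks that the file exists and is non-empty instead of printing the secret.

```shell
# Sketch: confirm a pod was mutated and that the secret file was written.
verify_injection() {
  local ns="$1"    # namespace of the pod
  local pod="$2"   # pod name
  local path="$3"  # expected path of the injected secret
  local injected

  # Read the annotation the injector adds; dots in the key are escaped
  # because kubectl's JSONPath would otherwise treat them as separators.
  injected="$(kubectl -n "$ns" get pod "$pod" \
    -o jsonpath='{.metadata.annotations.secrets-injector\.fortanix\.com/injected}')" || return 1

  if [ "$injected" != "true" ]; then
    echo "pod $ns/$pod was not mutated" >&2
    return 1
  fi

  # Succeeds only if the file exists and is non-empty.
  kubectl -n "$ns" exec "$pod" -- test -s "$path"
}

# Example:
# verify_injection fortanix-demo test-demo-pod /opt/myapp/credentials/test
```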

And it’s all there! You have successfully shared the same secret across your DOKS and your AKS environment!

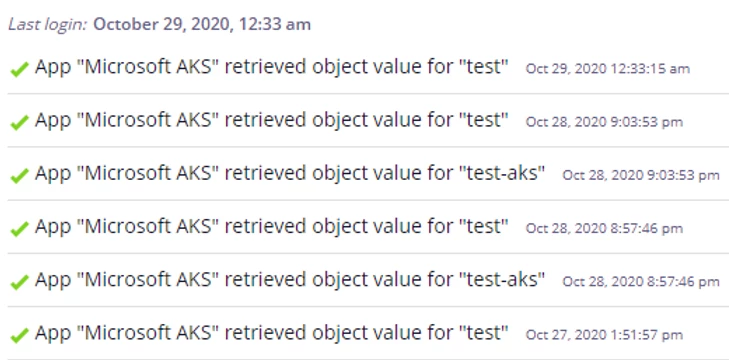

We can also see the secret was accessed by the newly created pod:

There are many other configuration variables you can set here; they are all documented on Fortanix’s support website.

For the next blog post on AKS, I’ll go through the use of DCsv2-Series instances (the Intel SGX based Azure Confidential Computing service) to show the difference between running the admission controller and sidecar container on secure-enclave based infrastructure and on non-enclave infrastructure, and how that further secures your secrets injection.

Summary about Fortanix

With Fortanix, developers can easily change their containerized applications such as databases, web proxies, secret vaults, or any application containers to confidential containers with a single click, in a matter of seconds, without any need to modify or recompile the application.

Get started with a Fortanix Confidential Computing Manager free trial account, which will enable you to create confidential containers through an intuitive UI or REST APIs.

You can find a Fortanix Quick Start guide here that will walk you through the process and additional documentation on the Fortanix integration with Microsoft Azure Kubernetes Service.

Feedback is always welcome and let me know your thoughts!
