Our world is undergoing an information “Big Bang”, in which the data universe doubles every two years and quintillions of bytes of data are generated every day [1]. This abundance of data, coupled with advanced, affordable, and available computing technology, has fueled the development of artificial intelligence (AI) applications that impact most aspects of modern life, from autonomous vehicles and recommendation systems to automated diagnosis and drug discovery in healthcare.

Increasing AI adoption generates immense business opportunities, but with that comes incredible responsibility. AI’s direct impact on people has raised many questions about privacy, security, data governance, trust, and legality [2].

It is therefore imperative for critical domains such as healthcare, banking, and automotive to adopt the principles of responsible AI. Doing so lets businesses scale up their AI adoption and capture its benefits while maintaining user trust and confidence.

In this blog, through an example of AI-assisted chest X-ray analysis for COVID-19 detection using a deep convolutional neural network (CNN), we demonstrate the combined potential of Confidential Computing and AI model security to secure private healthcare data in use.

Further, we demonstrate how an AI security solution protects the application from adversarial attacks and safeguards the intellectual property within healthcare AI applications.

AI in Healthcare: Opportunities and Threats

Artificial intelligence (AI) applications in healthcare and the biological sciences are among the most fascinating, important, and valuable fields of scientific research. With ever-increasing amounts of data available to train new models and the promise of new medicines and therapeutic interventions, the use of AI within healthcare provides substantial benefits to patients.

For example, AI-based applications are used in digital pathology, MRI scan analysis, dermatology scans, and chest X-ray analysis, to name only a few contemporary use cases. However, as artificial intelligence evolves, new security threats are emerging that can challenge individual privacy.

This concern is even more critical in sensitive domains such as healthcare and life sciences. Gartner [3] found that 41% of the organizations surveyed in 2021 had experienced AI attacks, out of which 60% faced insider attacks and 27% were attributed to a malicious compromise of their AI infrastructure. The actual number of security incidents may be higher.

Furthermore, the benchmark HIMSS survey [4] in healthcare indicates that 27% of respondents reported some form of data theft or breach, and 10% reported malicious insider activity as the cause of significant security incidents. The survey report suggests that the threat actors' motivation was to steal patient information in 34% of cases and intellectual property in 12% of the cases covered.

HIMSS [5] emphasizes that "patient lives depend upon the confidentiality, integrity, and availability of data, as well as a reliable and dependable technology infrastructure."

This requirement makes healthcare one of the most sensitive industries dealing with vast quantities of information. This data is subject to regulation under various data privacy laws. Hence, healthcare applications have a compelling need to ensure that data is properly protected and AI models are kept secure.

Ensuring Data Security with Confidential Computing

As a leader in the development and deployment of Confidential Computing technology [6], Fortanix® takes a data-first approach to the data and applications used within today’s complex AI systems.

Confidential Computing protects data in use within a protected memory region, referred to as a trusted execution environment (TEE). The memory associated with a TEE is encrypted to prevent unauthorized access by privileged users, the host operating system, peer applications using the same computing resource, and any malicious threats resident in the connected network.

This capability, combined with traditional data encryption and secure communication protocols, enables AI workloads to be protected at rest, in motion, and in use – even on untrusted computing infrastructure, such as the public cloud.

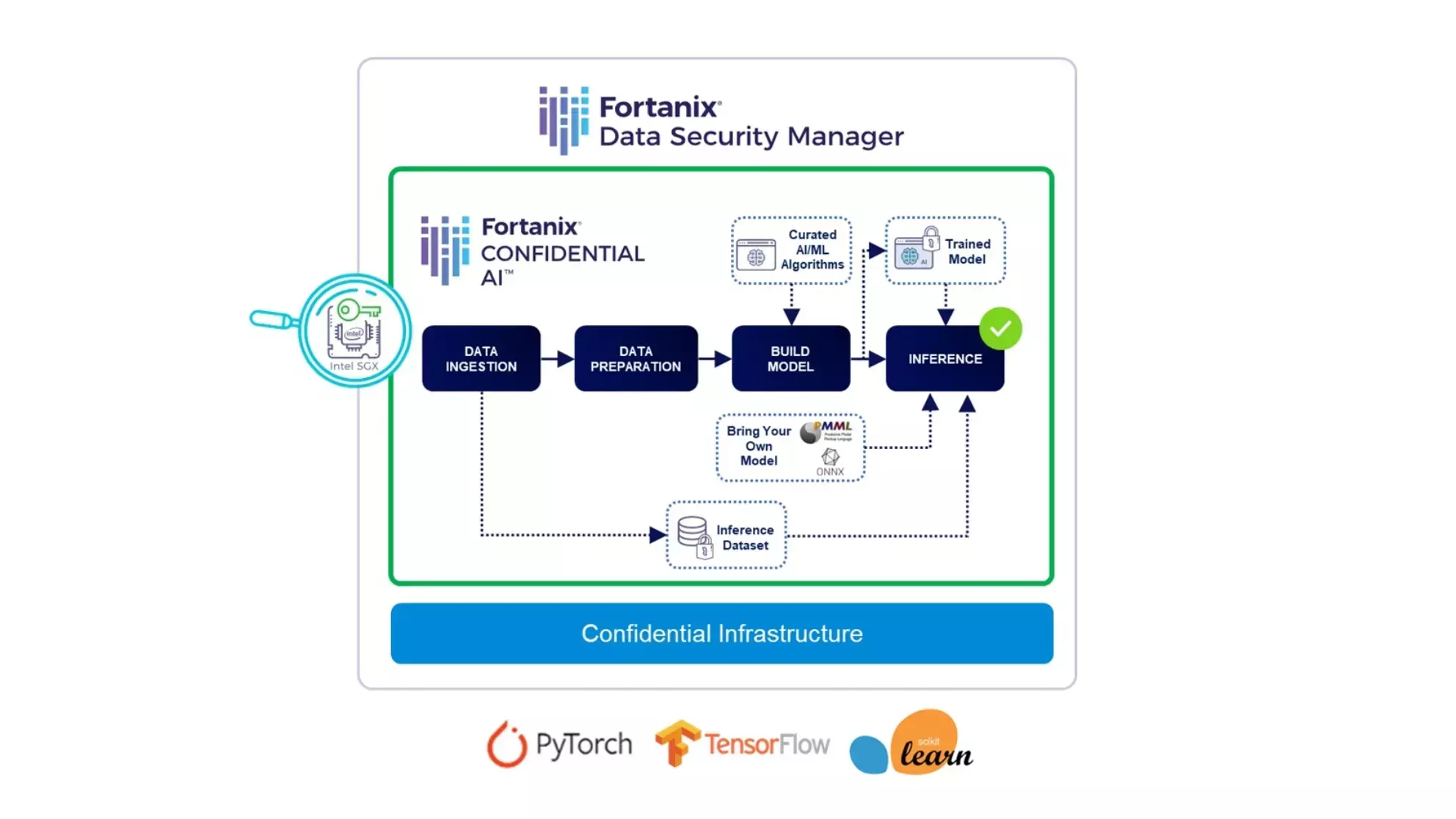

To support the implementation of Confidential Computing by AI developers and data science teams, the Fortanix Confidential AI™ software-as-a-service (SaaS) solution uses Intel® Software Guard Extensions (Intel® SGX) technology to enable model training, transfer learning, and inference using private data.

Models are deployed using a TEE, referred to as a “secure enclave” in the case of Intel® SGX, with an auditable transaction report provided to users on completion of the AI workload. This seamless service requires no knowledge of the underlying security technology and provides data scientists with a simple method of protecting sensitive data and the intellectual property represented by their trained models.

In addition to a library of curated models provided by Fortanix, users can bring their own models in either ONNX or PMML (Predictive Model Markup Language) format. A schematic representation of the Fortanix Confidential AI workflow is shown in Figure 1:

Figure 1: Secure AI workflows with Fortanix Confidential AI

Detect and remediate attacks with AI Model Security

The previous section outlined how Confidential Computing helps to complete the circle of data privacy by securing data throughout its lifecycle: at rest, in motion, and during processing.

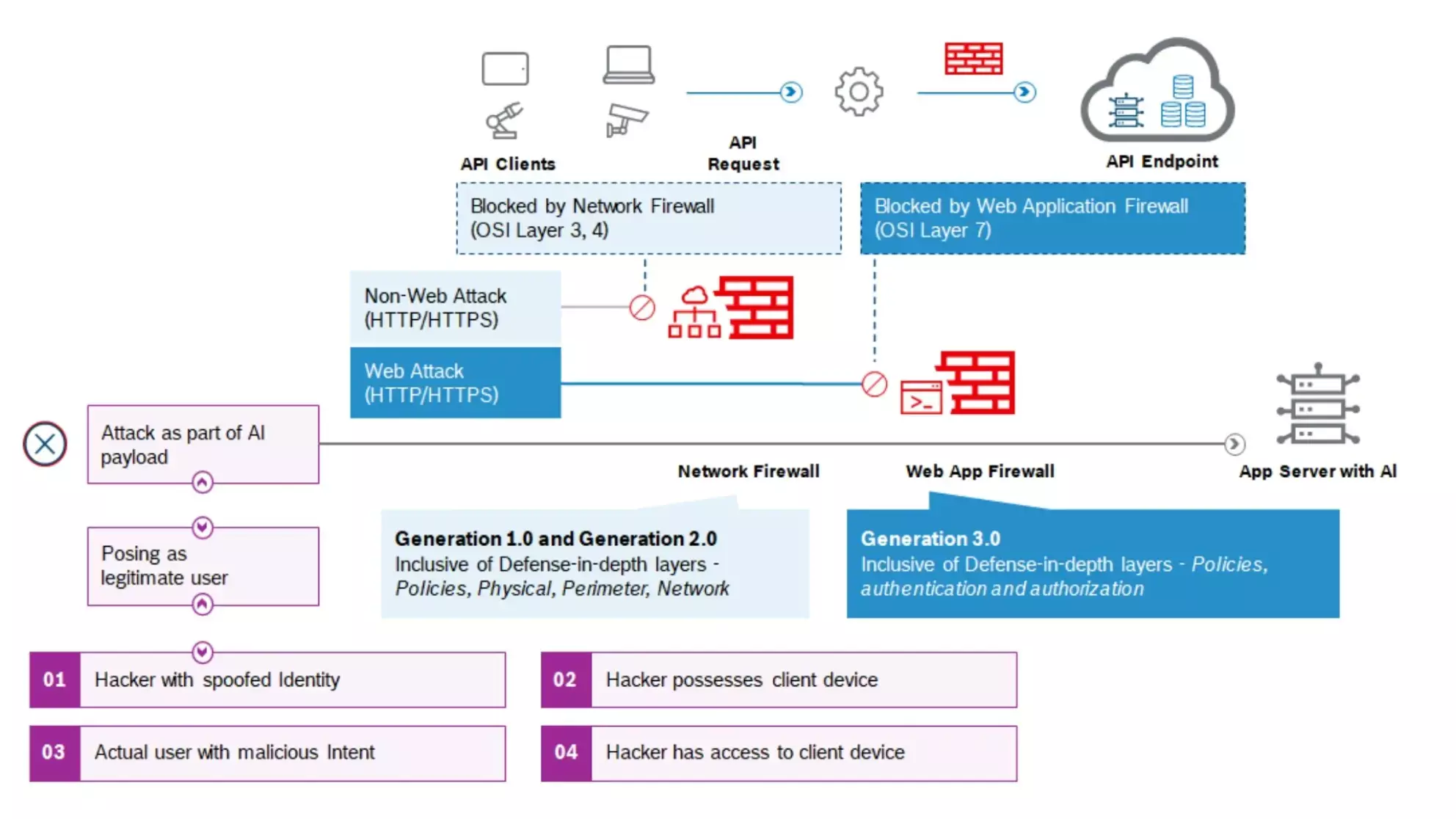

However, an AI application remains vulnerable to attack if its model is deployed and exposed as an API endpoint, even inside a secure enclave.

By querying the model API, an attacker can steal the model using a black-box attack strategy. With the help of the stolen model, the attacker can then launch other sophisticated attacks such as model evasion or membership inference.
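To make the black-box idea concrete, here is a minimal toy sketch in Python. It is not the attack or the model used in this blog: `victim_predict` is a hypothetical stand-in for a deployed model API that returns only labels, and the surrogate is a simple perceptron chosen for illustration. The point is only that query/response pairs alone can be enough to approximate a model's decision boundary.

```python
import random

def victim_predict(x):
    """Hypothetical deployed model API: the caller sees only the label."""
    # Secret decision rule, hidden from the attacker (illustrative values).
    return 1 if 2.0 * x[0] - 1.0 * x[1] + 0.5 > 0 else 0

def train_surrogate(samples, labels, epochs=50, lr=0.1):
    """Fit a perceptron to the (query, label) pairs harvested from the API."""
    w0, w1, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for (x0, x1), y in zip(samples, labels):
            pred = 1 if w0 * x0 + w1 * x1 + b > 0 else 0
            err = y - pred  # 0 when correct, +1 or -1 when wrong
            w0 += lr * err * x0
            w1 += lr * err * x1
            b += lr * err
    return w0, w1, b

def surrogate_predict(params, x):
    """Predict with the locally trained copy of the model."""
    w0, w1, b = params
    return 1 if w0 * x[0] + w1 * x[1] + b > 0 else 0

rng = random.Random(0)

# Step 1: send ordinary-looking queries to the API and record the answers.
queries = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(500)]
stolen_labels = [victim_predict(q) for q in queries]

# Step 2: train a local surrogate on the harvested pairs.
surrogate = train_surrogate(queries, stolen_labels)

# Step 3: measure how often the surrogate agrees with the victim on new inputs.
fresh = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(200)]
agreement = sum(
    surrogate_predict(surrogate, p) == victim_predict(p) for p in fresh
) / len(fresh)
print(f"Surrogate agrees with the victim on {agreement:.0%} of fresh inputs")
```

Every query in this sketch is a well-formed request, which is why this kind of traffic is hard to distinguish from legitimate use at the network or API-gateway level.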

What differentiates an AI attack from a conventional cybersecurity attack is that the attack data can be part of the payload. An attacker posing as a legitimate user can carry out the attack undetected by conventional cybersecurity systems.

To understand what AI attacks are, please visit https://boschaishield.com/blog/what-are-ai-attacks/.

Figure 2: Ineffectiveness of traditional security measures on AI attacks

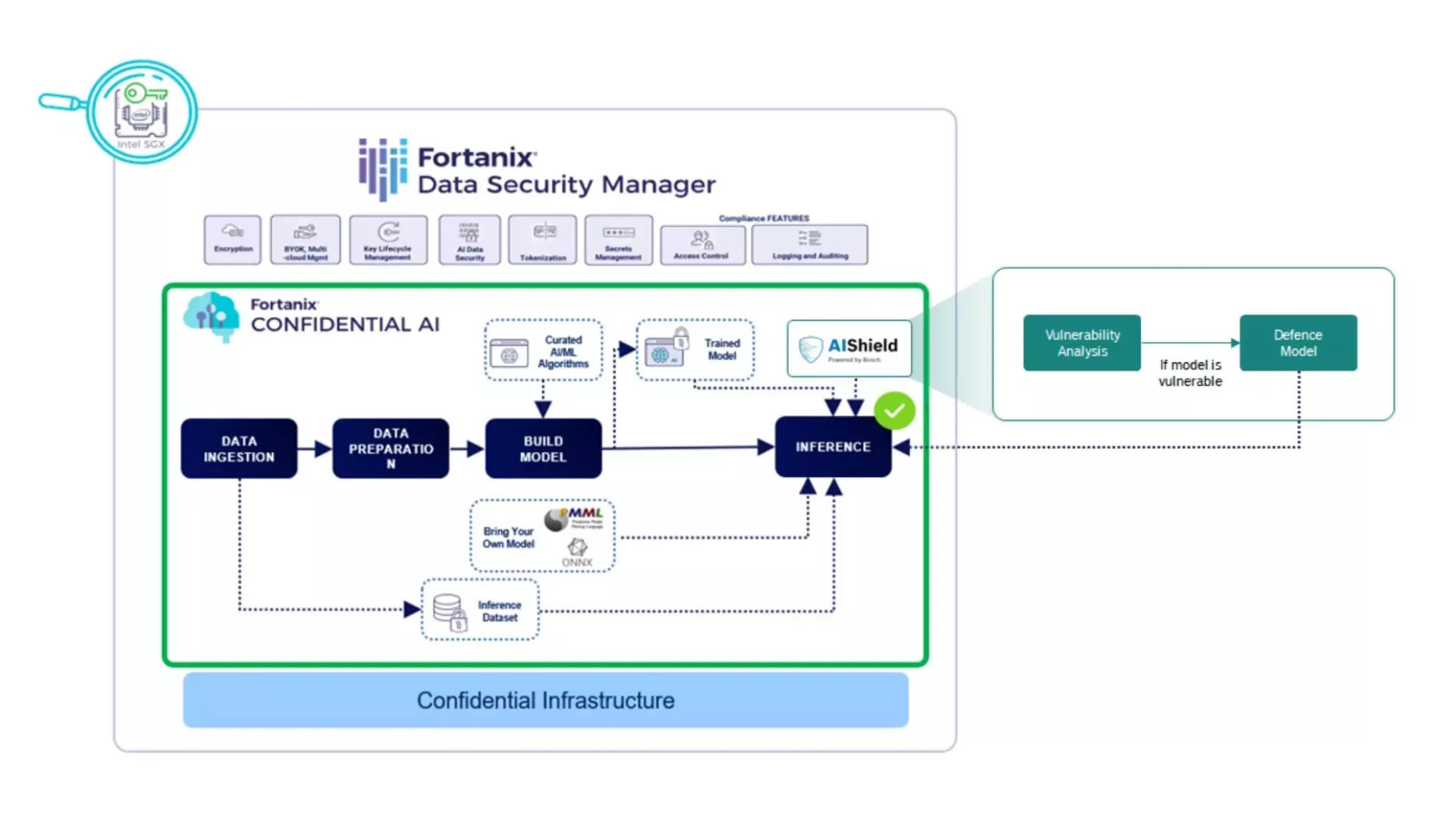

AIShield is a SaaS-based offering that provides enterprise-class vulnerability assessment for AI models and a threat-informed defense model for security hardening of AI assets.

AIShield, designed as an API-first product, can be integrated into the Fortanix Confidential AI model development pipeline, providing vulnerability assessment and threat-informed defense generation capabilities. The threat-informed defense model generated by AIShield can predict whether a data payload is an adversarial sample.

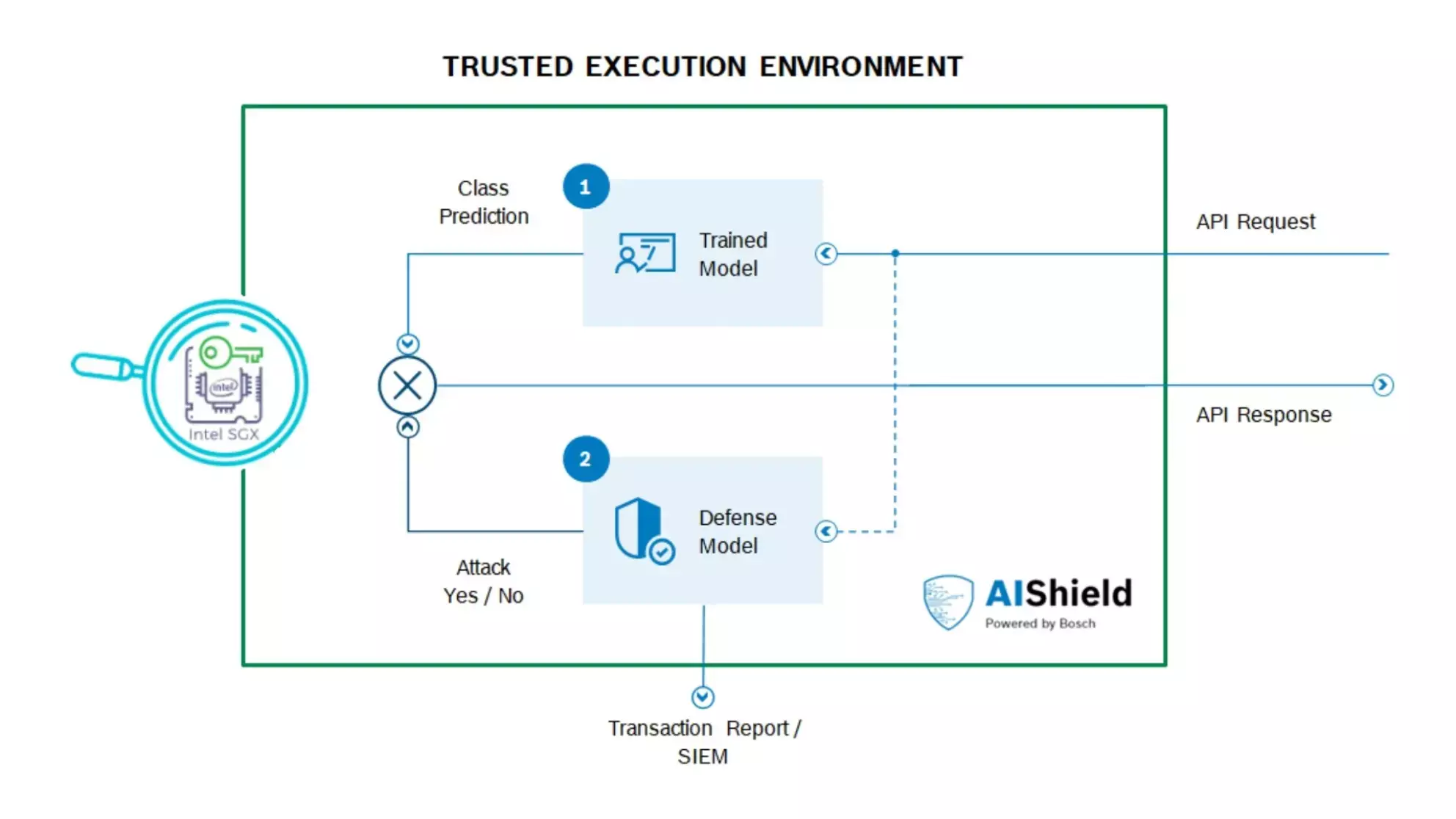

This defense model can be deployed inside the Confidential Computing environment (Figure 3) and sit with the original model to provide feedback to an inference block (Figure 4). This allows the AI system to decide on remedial actions in the event of an attack.

For example, the system can choose to block an attacker after detecting repeated malicious inputs, or even respond with a random prediction to fool the attacker. AIShield provides the last layer of defense, fortifying your AI application against emerging AI security threats.

It equips users with security out of the box and integrates seamlessly with the Fortanix Confidential AI SaaS workflow.
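The interaction between the original model, the defense model, and the inference block can be sketched roughly as follows. This is an illustrative sketch only, not the AIShield or Fortanix API: `InferenceGate`, `toy_model`, `toy_detector`, and the three-strike threshold are all hypothetical names and values chosen for the example.

```python
import random
from collections import defaultdict

def toy_model(pixels):
    """Hypothetical original model: classifies by mean pixel intensity."""
    return "positive" if sum(pixels) / len(pixels) > 0.5 else "negative"

def toy_detector(pixels):
    """Hypothetical defense model: flags values outside the valid [0, 1] range."""
    return any(p < 0.0 or p > 1.0 for p in pixels)

class InferenceGate:
    """Consults the defense model before releasing the original model's output."""

    def __init__(self, model, detector, labels, max_strikes=3, seed=0):
        self.model = model
        self.detector = detector
        self.labels = labels
        self.max_strikes = max_strikes      # assumed blocking threshold
        self.strikes = defaultdict(int)     # flagged inputs per client
        self.rng = random.Random(seed)

    def predict(self, client_id, payload):
        """Return (action, label); the action is for internal logging only."""
        if self.strikes[client_id] >= self.max_strikes:
            return "blocked", None
        if self.detector(payload):
            self.strikes[client_id] += 1
            if self.strikes[client_id] >= self.max_strikes:
                return "blocked", None
            # Answer with a random label so the attacker learns nothing useful.
            return "decoy", self.rng.choice(self.labels)
        return "served", self.model(payload)

gate = InferenceGate(toy_model, toy_detector, labels=["positive", "negative"])
print(gate.predict("clinic-a", [0.2, 0.9, 0.8]))   # benign request
for _ in range(3):
    print(gate.predict("unknown-client", [5.0, -3.0]))  # suspicious requests
```

Keeping the remediation policy in a small wrapper like this, separate from both models, means the blocking threshold or decoy behavior can be changed without retraining either model.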

Figure 3: AIShield Defense model within the Fortanix Confidential AI workflow (inside the Trusted Execution Environment)

Figure 4: Interaction between original and defense model

Conclusion

By leveraging technologies from Fortanix and AIShield, enterprises can be assured that their data stays protected and their models are executed securely. The combined technology protects both the data and the AI model at runtime against advanced adversarial threat actors.

Using Fortanix Confidential AI with AIShield AI Security solutions, organizations can realize the complete potential of AI adoption without worrying about the vulnerabilities and data privacy concerns which have previously limited the growth opportunities available through artificial intelligence and machine learning (AI/ML) techniques.

References:

- [1] https://www.brookings.edu/research/protecting-privacy-in-an-ai-driven-world/

- [2] https://www.accenture.com/nz-en/services/applied-intelligence/ai-ethics-governance/

- [3] https://blogs.gartner.com/avivah-litan/2022/08/05/ai-models-under-attack-conventional-controls-are-not-enough

- [4] https://www.himss.org/sites/hde/files/media/file/2020/11/16/2020_himss_cybersecurity_survey_final.pdf

- [5] https://www.himss.org/resources/himss-healthcare-cybersecurity-survey

- [6] Confidential Computing Manager | Fortanix

